Smart Displays with Generative AI-Driven Voice Intelligence

Powering 10,000+ smart displays with a GenAI-driven voice assistant that delivers real-time control, regional language intelligence, and sub-2-second responses.


The global smart display market is forecast to reach around USD 59.38 billion by 2034, growing at a CAGR of 20.47% from 2025 to 2034. This growth is driven by the increasing integration of voice assistants, the adoption of smart home technologies, and the growing use of interactive displays.

This case study describes how Azilen worked with one of its clients to build a GenAI Smart Control Solution that runs across more than 10,000 smart display devices simultaneously.

The sections that follow describe this smart display ecosystem: a GenAI-powered virtual assistant that supports Brazilian Portuguese, Argentinian Spanish, and Mexican Spanish with sub-2-second latency for the client's target market in the LATAM region.

Headless Voice Intelligence Engine

LLM-based Voice Command Intent Recognition

OpenAI Whisper Model for Speech Recognition

TV-specific Knowledge Base for Virtual Assistant

3rd Party Integrations – Weather, News, IMDb & Wikipedia

LLM & ASR Model Hosting with Amazon Bedrock

The client is a visual digital entertainment company that develops, manufactures, markets, and supports well-known branded TV, smart display, and audio products across the Europe, LATAM, and APMEA regions.

Azilen worked with the client to take the concept from idea to execution: a voice AI solution that acts as a virtual assistant for smart display users, backed by a TV-specific knowledge base and adapted for Brazilian Portuguese, Argentinian Spanish, and Mexican Spanish.

Smart displays powered globally

Multilingual accuracy supporting LATAM regional dialects

Concurrent monthly voice interactions supported

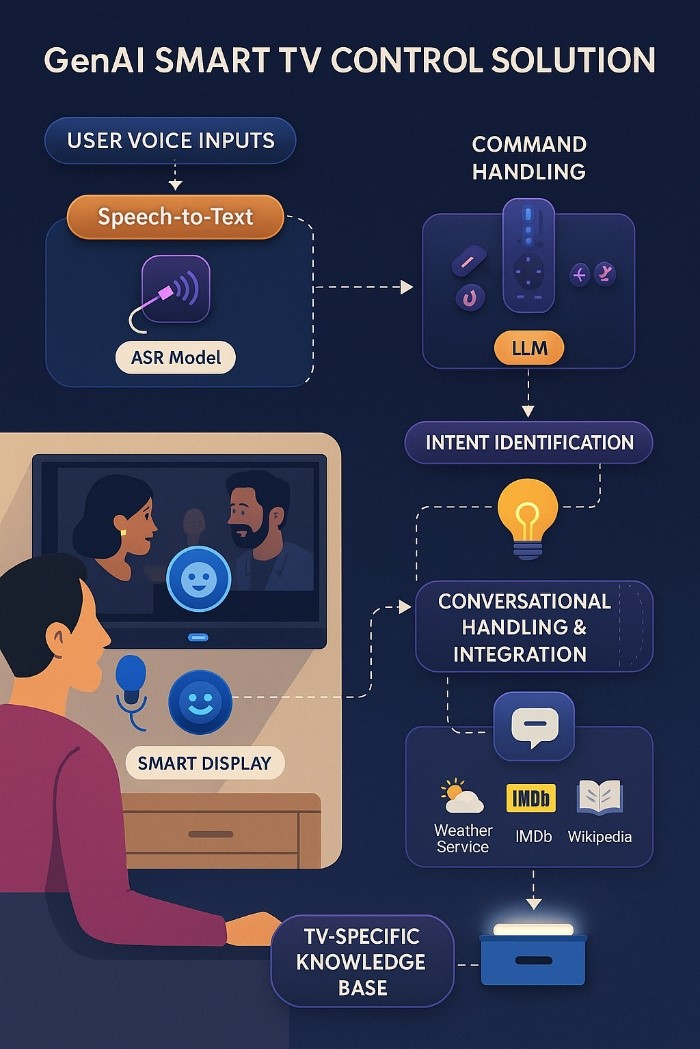

The generative AI-powered voice assistant processes each voice interaction end to end, from speech input on the smart display to command execution and contextual response delivery, all orchestrated through AWS IoT Core and custom-built backend microservices.

➡️ User activates the microphone on the smart display using a remote.

➡️ The microphone captures the voice input and converts it into an audio file.

➡️ The audio file is securely transferred to AWS IoT Core through MQTT.

➡️ The MQTT Data Listener triggers the ASR model.

➡️ The Whisper-based Automatic Speech Recognition (ASR) service processes the audio input.

➡️ Converts the captured speech into transcribed text, with language translation if required.

➡️ The transcribed text is forwarded to the backend services for further processing.
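The capture-and-transfer steps above imply that the device packages each recording into an MQTT message that the listener can validate before invoking ASR. The following is a minimal sketch, assuming a hypothetical JSON envelope (`device_id`, `locale`, base64-encoded `audio`); the actual payload format is not described in this case study. It also checks AWS IoT Core's 128 KB limit on MQTT publish payloads, since base64 inflates audio by roughly a third.

```python
import base64
import json
from dataclasses import dataclass

# AWS IoT Core rejects MQTT publish payloads larger than 128 KB.
MAX_PAYLOAD_BYTES = 128 * 1024

# Hypothetical locale codes for the three LATAM variants in this case study.
SUPPORTED_LOCALES = {"pt-BR", "es-AR", "es-MX"}


@dataclass
class VoiceRequest:
    device_id: str
    locale: str
    audio: bytes


def encode_request(req: VoiceRequest) -> bytes:
    """Device side: wrap raw audio in a JSON envelope for MQTT publish."""
    payload = json.dumps({
        "device_id": req.device_id,
        "locale": req.locale,
        "audio": base64.b64encode(req.audio).decode("ascii"),
    }).encode("utf-8")
    if len(payload) > MAX_PAYLOAD_BYTES:
        raise ValueError("payload exceeds the MQTT limit; chunk it or upload to S3")
    return payload


def decode_request(payload: bytes) -> VoiceRequest:
    """Listener side: validate the envelope before handing audio to ASR."""
    data = json.loads(payload)
    if data.get("locale") not in SUPPORTED_LOCALES:
        raise ValueError(f"unsupported locale: {data.get('locale')!r}")
    return VoiceRequest(
        device_id=data["device_id"],
        locale=data["locale"],
        audio=base64.b64decode(data["audio"]),
    )
```

Carrying the locale alongside the audio lets the ASR service pick the matching regional model or language hint without first running language detection.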

The evolution of smart display ecosystems marks a shift from command-driven to conversational interfaces—where context, emotion, and personalization define engagement.

Azilen’s approach demonstrates how Generative AI, when integrated with asynchronous IoT frameworks, can transform everyday devices into adaptive digital companions.

The solution exemplifies a blueprint for future-ready entertainment systems that learn, respond, and evolve with user behavior.

➡️ The backend Intent Recognition Service, powered by an LLM on Amazon Bedrock, analyzes the transcribed text.

➡️ Determines whether the user request represents a Command or a Conversation.

➡️ Acts as the central routing logic for subsequent services.
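One way to implement the Command-versus-Conversation decision is to ask the LLM for a constrained JSON label and validate it before routing. The sketch below assumes that approach; the prompt text, the `llm` callable (a stand-in for the actual Bedrock invocation), and the fallback rule are all hypothetical.

```python
import json
from typing import Callable, Dict

# Hypothetical prompt; the production prompt is not described in the case study.
INTENT_PROMPT = (
    "Classify the request sent to a smart TV assistant. Reply with JSON only: "
    '{"intent": "command"} for device control such as volume, mute or channel '
    'changes, otherwise {"intent": "conversation"}.\nRequest: '
)

VALID_INTENTS = {"command", "conversation"}


def classify_intent(text: str, llm: Callable[[str], str]) -> str:
    """Ask the LLM for an intent label and validate whatever it returns.

    `llm` takes a prompt and returns the model's raw text; in production it
    would wrap the Bedrock invocation (not shown here).
    """
    raw = llm(INTENT_PROMPT + text)
    try:
        intent = json.loads(raw).get("intent")
    except (json.JSONDecodeError, AttributeError):
        intent = None
    if isinstance(intent, str) and intent in VALID_INTENTS:
        return intent
    # Unparseable or unexpected output falls back to the conversational path,
    # which only produces a spoken reply and never changes device state.
    return "conversation"


def route(text: str, llm: Callable[[str], str],
          handlers: Dict[str, Callable[[str], str]]) -> str:
    """Central routing: dispatch the transcript to the matching handler."""
    return handlers[classify_intent(text, llm)](text)
```

Defaulting to "conversation" is a safety choice in this sketch: a misclassified request yields a possibly unhelpful answer rather than an unintended change to the TV.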

➡️ Triggered when the intent is identified as a device command (e.g., volume control, mute, channel change).

➡️ The Command Handling module processes the instruction and interfaces with device functions.

➡️ Generates a structured command response for execution on the smart display.
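Generating a structured command response typically means checking the LLM's extracted action against a fixed whitelist and clamping parameter values before anything reaches the device. The following is a minimal sketch of that validation step, assuming the LLM has already produced a dict such as `{"action": "set_volume", "value": 30}`. The action names, the 0-100 volume range, and the channel rule are hypothetical.

```python
from typing import Any, Dict

# Hypothetical action catalogue; the real device command set is not published.
ALLOWED_ACTIONS = {"set_volume", "volume_up", "volume_down", "mute", "unmute", "set_channel"}


def build_device_command(extracted: Dict[str, Any]) -> Dict[str, Any]:
    """Turn an LLM-extracted command into a validated, structured command."""
    action = extracted.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    command: Dict[str, Any] = {"action": action}
    if action == "set_volume":
        # Clamp so a misrecognized "volume 300" stays within an assumed 0-100 range.
        command["value"] = max(0, min(100, int(extracted["value"])))
    elif action == "set_channel":
        channel = int(extracted["value"])
        if channel < 1:
            raise ValueError("channel numbers start at 1")
        command["value"] = channel
    return command
```

Keeping this step deterministic means the LLM only proposes a command, while plain code decides what the display is actually allowed to execute.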

➡️ Triggered for general conversational queries.

➡️ Routes requests through an Integration Service connected to third-party APIs such as Weather, IMDb, News, and Wikipedia.

➡️ Uses asynchronous communication and caching for optimized response delivery.
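Asynchronous calls and caching matter here because several third-party APIs can be queried for a single question within a sub-2-second budget. The sketch below shows one way to combine them with `asyncio`: every source is queried concurrently, each call is capped by a timeout, and results are kept in a small time-to-live cache. The `TTLCache` class, the timeout value, and the fetcher functions are hypothetical stand-ins for the actual Integration Service.

```python
import asyncio
import time
from typing import Awaitable, Callable, Dict, Optional, Tuple

Fetcher = Callable[[str], Awaitable[str]]


class TTLCache:
    """Minimal in-memory cache whose entries expire after `ttl` seconds."""

    def __init__(self, ttl: float) -> None:
        self.ttl = ttl
        self._store: Dict[Tuple[str, str], Tuple[float, str]] = {}

    def get(self, key: Tuple[str, str]) -> Optional[str]:
        hit = self._store.get(key)
        if hit is not None and time.monotonic() - hit[0] < self.ttl:
            return hit[1]
        return None

    def put(self, key: Tuple[str, str], value: str) -> None:
        self._store[key] = (time.monotonic(), value)


async def fetch_cached(cache: TTLCache, source: str, query: str,
                       fetcher: Fetcher, timeout: float) -> Optional[str]:
    key = (source, query)
    cached = cache.get(key)
    if cached is not None:
        return cached
    try:
        value = await asyncio.wait_for(fetcher(query), timeout=timeout)
    except asyncio.TimeoutError:
        return None  # skip a slow source rather than exceed the latency budget
    cache.put(key, value)
    return value


async def gather_context(query: str, fetchers: Dict[str, Fetcher],
                         cache: TTLCache, timeout: float = 0.5) -> Dict[str, Optional[str]]:
    """Query all sources concurrently; total wait is about the slowest call, capped by `timeout`."""
    names = list(fetchers)
    results = await asyncio.gather(
        *(fetch_cached(cache, name, query, fetchers[name], timeout) for name in names)
    )
    return dict(zip(names, results))
```

Because the calls run concurrently, adding a fourth source does not add its latency to the total, and repeated questions (for example, the same city's weather) are answered from the cache without a network call.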

➡️ Implemented with the Cache-Augmented Generation (CAG) mechanism.

➡️ Retrieves verified product knowledge, manuals, and FAQs stored in AWS S3.

➡️ Generates concise and user-friendly responses for product or troubleshooting queries.
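Cache-Augmented Generation, as the term is generally used, skips per-query retrieval: a small, stable knowledge corpus is loaded into the model's context ahead of time and that same context is reused for every question. The following is a minimal sketch of the prompt side of that idea, assuming the knowledge base fits in the context window. `KNOWLEDGE_SOURCES` is a hypothetical in-memory stand-in for the S3 documents. The sketch caches only the assembled text prefix; reusing the model's computed state for that prefix (provider-side prompt caching or KV-cache reuse) is not shown.

```python
from functools import lru_cache
from typing import Dict

# Hypothetical stand-in for the manuals and FAQs stored in S3; a real loader
# would read these objects from the bucket once at startup.
KNOWLEDGE_SOURCES: Dict[str, str] = {
    "faq/wifi.md": "Example FAQ entry about Wi-Fi troubleshooting.",
    "manual/reset.md": "Example manual section about factory reset.",
}


@lru_cache(maxsize=1)
def knowledge_prefix() -> str:
    """Assemble the full knowledge context once; later calls return the cached string."""
    sections = [f"### {name}\n{text}" for name, text in sorted(KNOWLEDGE_SOURCES.items())]
    return (
        "You are a TV support assistant. Answer briefly, using only the "
        "product knowledge below.\n\n" + "\n\n".join(sections)
    )


def build_cag_prompt(question: str) -> str:
    # No per-query retrieval step: every prompt starts with the same preloaded
    # prefix, which is what lets it be cached and reused across requests.
    return knowledge_prefix() + "\n\nUser question: " + question
```

This trade-off suits a bounded, verified corpus such as TV manuals and FAQs: answers cannot miss a document because of a poor retrieval match, at the cost of a longer, fixed prompt.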

➡️ The final response—whether a command confirmation or conversational reply—is published back to AWS IoT Core via MQTT.

➡️ Delivered to the smart display and rendered through the built-in Text-to-Speech (TTS) engine.
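On the return path, each reply must be addressed to the one display that asked and must carry text the on-device TTS engine can speak. The following is a minimal sketch of building that message, assuming a hypothetical per-device topic scheme (`smartdisplay/{device_id}/response`) and payload fields; the actual topic layout and schema are not given in this case study.

```python
import json
from typing import Any, Dict, Optional, Tuple


def build_response_message(device_id: str, locale: str, speech: str,
                           command: Optional[Dict[str, Any]] = None) -> Tuple[str, bytes]:
    """Return (topic, payload) for publishing a reply back to one display."""
    # MQTT topic names used for publishing may not contain the '+' or '#'
    # wildcards; '/' is rejected too so the ID cannot add topic levels.
    if not device_id or any(ch in device_id for ch in "+#/"):
        raise ValueError(f"invalid device_id: {device_id!r}")
    topic = f"smartdisplay/{device_id}/response"  # hypothetical topic scheme
    body: Dict[str, Any] = {
        "type": "command" if command is not None else "conversation",
        "locale": locale,
        "tts_text": speech,  # spoken on-device by the built-in TTS engine
    }
    if command is not None:
        body["command"] = command
    # ensure_ascii=False keeps Portuguese and Spanish accents readable in the JSON.
    return topic, json.dumps(body, ensure_ascii=False).encode("utf-8")
```

A single envelope for both reply types keeps the device logic simple: it always speaks `tts_text`, and additionally executes `command` when one is present.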

➡️ Conducted research and workshops to select the ASR and LLM models offering the best balance of accuracy, cost efficiency, and performance.

➡️ Fine-tuned the Whisper model in stages on regional voice data to lower word error rates and improve accuracy on local dialects.

➡️ Regular testing, feedback, and benchmarking maintained high model quality and reliable performance.
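Word error rate (WER), the metric the Whisper tuning aimed to lower, is the word-level edit distance between a reference transcript and the ASR output, divided by the number of reference words. The following is a minimal sketch of that computation. Its normalization (lowercasing and whitespace splitting) is a simplifying assumption; real benchmarks usually also normalize punctuation and number formatting.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # Rolling row of the word-level Levenshtein table: prev[j] is the distance
    # between the reference words processed so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, transcribing "mudar para o canal cinco" as "mudar o canal cinco" drops one of five reference words, giving a WER of 0.2. Tracking this per dialect shows whether each tuning stage actually helps Brazilian, Argentinian, and Mexican speakers.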

