Do you recall the wave of Service-Oriented Architecture (SOA) in the 2000s?

Companies like IBM, Microsoft, and Oracle were experimenting with SOA, and thought leaders such as Thomas Erl were showing how to break silos and make systems communicate.

A few years later, microservices and containerization became the next wave. Pioneers like Netflix and Amazon showed how modular, independently deployable services could scale globally, and tools like Docker and Kubernetes made it accessible to enterprises.

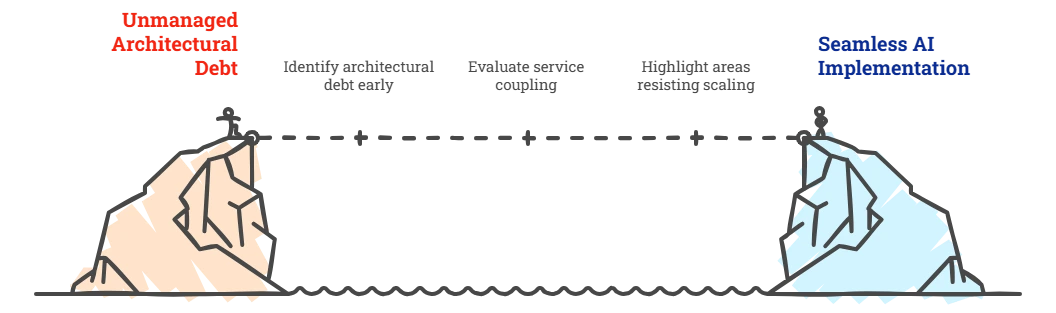

But even then, hidden coupling, complex integrations, and stubborn data flows quietly added new complexity.

This leads to one of the hard-to-digest realities:

Today, the story continues with AI.

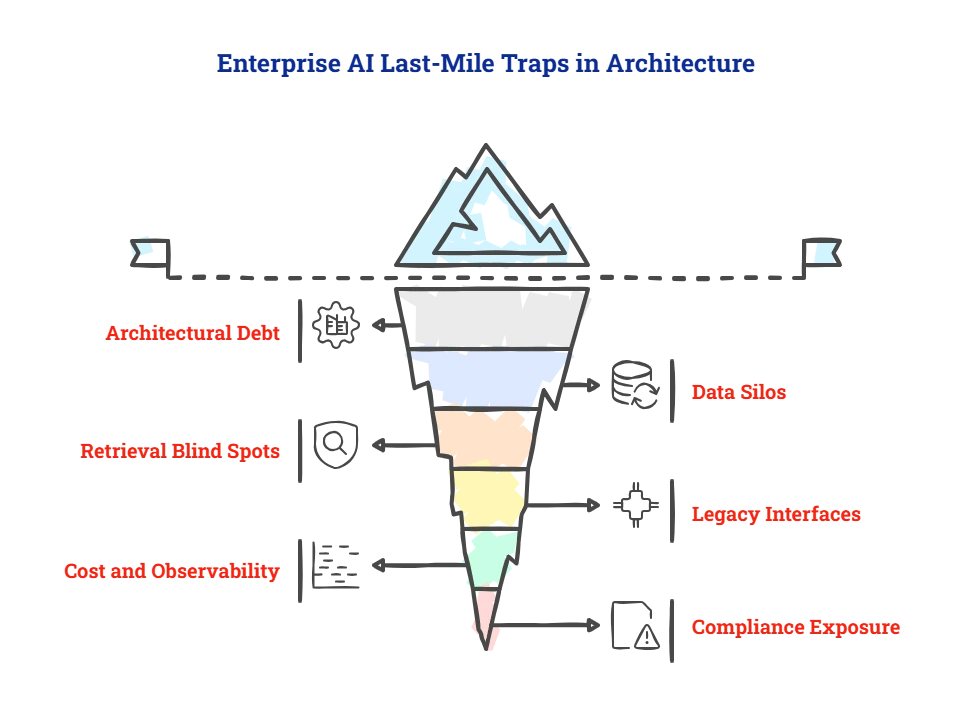

Generative AI, agentic systems, and retrieval pipelines can transform businesses. But when they meet legacy systems, scattered data, and strict compliance rules, the “last mile” shows up.

That is why the AI project failure rate is so high: multiple sources cite figures of around 80% to 95%, with most of those failures showing up in production.

At Azilen, we call this the architecture moment of truth – the point where AI either bends to enterprise reality or cracks under it. How we design, plan, and integrate around it is what true engineering excellence is all about.
