Reimagining Industrial Safety with GenAI and Data Engineering

Industrial safety depends on timely action and accurate information.

Our team set out to make both effortless with an AI-powered Industrial Safety Assistant that combines GenAI and data engineering to give on-ground technicians instant guidance, fault insights, and preventive measures.

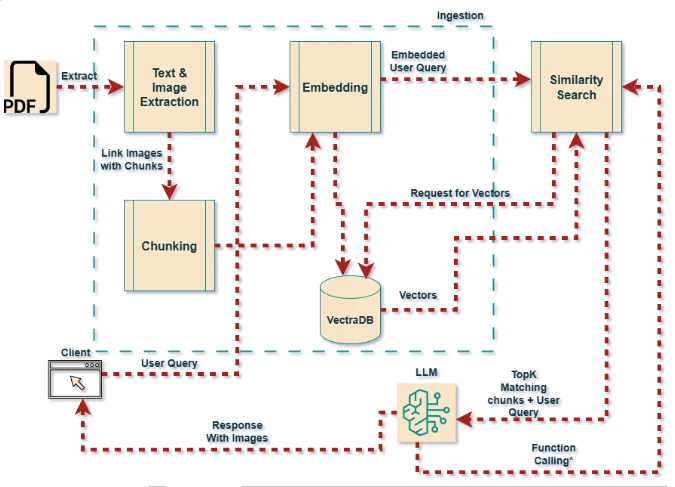

The assistant combines RAG-based knowledge retrieval, contextual response generation, and real-time operational data, and is designed for fast, high-risk environments where every second matters.
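The post does not describe how the assistant is implemented. The sketch below is one possible way to combine RAG-style retrieval with live operational data, and it rests on several assumptions. The safety procedures (`SOP-12`, `SOP-07`, `SOP-03`) are a small hypothetical in-memory set. A simple word-overlap (Jaccard) score stands in for the embedding-based vector search a production system would more likely use. The sensor names are made up. `retrieve` and `build_prompt` are illustrative helpers, not the team's API, and the call to the language model is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercase alphanumeric word tokens; a real system would likely embed text instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: str, doc: Doc) -> float:
    """Jaccard overlap between query and document tokens (stand-in for vector similarity)."""
    q, t = tokenize(query), tokenize(doc.text)
    union = q | t
    return len(q & t) / len(union) if union else 0.0


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Return up to k documents with a non-zero score, best match first."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


def build_prompt(query: str, context: list[Doc], readings: dict[str, float]) -> str:
    """Combine retrieved passages and live sensor readings into one grounded prompt."""
    ctx = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    live = "\n".join(f"{name}: {value}" for name, value in sorted(readings.items()))
    return (
        "Answer using only the safety context and live readings below.\n"
        f"Safety context:\n{ctx}\n"
        f"Live readings:\n{live}\n"
        f"Technician question: {query}"
    )


# Hypothetical knowledge base and live readings, for illustration only.
KB = [
    Doc("SOP-12", "If pump vibration exceeds limits, isolate the pump and inspect bearings."),
    Doc("SOP-07", "Wear gas detectors when entering confined spaces."),
    Doc("SOP-03", "Lock out and tag out electrical panels before maintenance."),
]
question = "Pump vibration is high, what should I do?"
top = retrieve(question, KB, k=1)
print(top[0].doc_id)  # SOP-12
print(build_prompt(question, top, {"vibration_mm_s": 11.2, "temp_c": 78.0}))
```

Grounding the prompt in both retrieved procedures and current readings lets the model's answer reflect the documented procedure and the equipment's present state. Keeping retrieval separate from generation also means the ranking step can be swapped for a vector index without changing how prompts are built.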

The initiative was driven by a cross-functional team that brought together engineering depth and domain intuition:

→ Dipesh Bhavsar – Technical Project Manager

→ Vipul Makwana – Technical Leader

→ Palak Patel – Scrum Master

→ Ghanshyam Savaliya – Senior Software Engineer II

→ Alpesh Padariya – Senior Software Engineer II

→ Darshit Gandhi – Senior Software Engineer II

→ Swati Babariya – Senior Software Engineer I

→ Rency Ruparelia – Software Engineer

→ Bhavya Thakkar – Associate Software Engineer

→ Jil Patel – Associate Software Engineer

Together, they showcased how industrial safety can evolve from compliance to intelligence, where every query, alert, and recommendation is powered by data awareness and AI reasoning.
