The Evolution of Data Engineering

Data engineering has reinvented itself roughly every decade.

2000s – The ETL era: Tools like Informatica powered structured reporting pipelines.

2010s – The Big Data wave: Hadoop and Spark scaled processing to billions of records.

2015–2020 – The Cloud shift: Snowflake, BigQuery, and Databricks brought elastic pipelines and real-time analytics.

2020–2024 – DataOps and automation: Monitoring and ML-assisted tools improved reliability.

Now, in 2025, agentic AI is carrying data engineering into its next era.

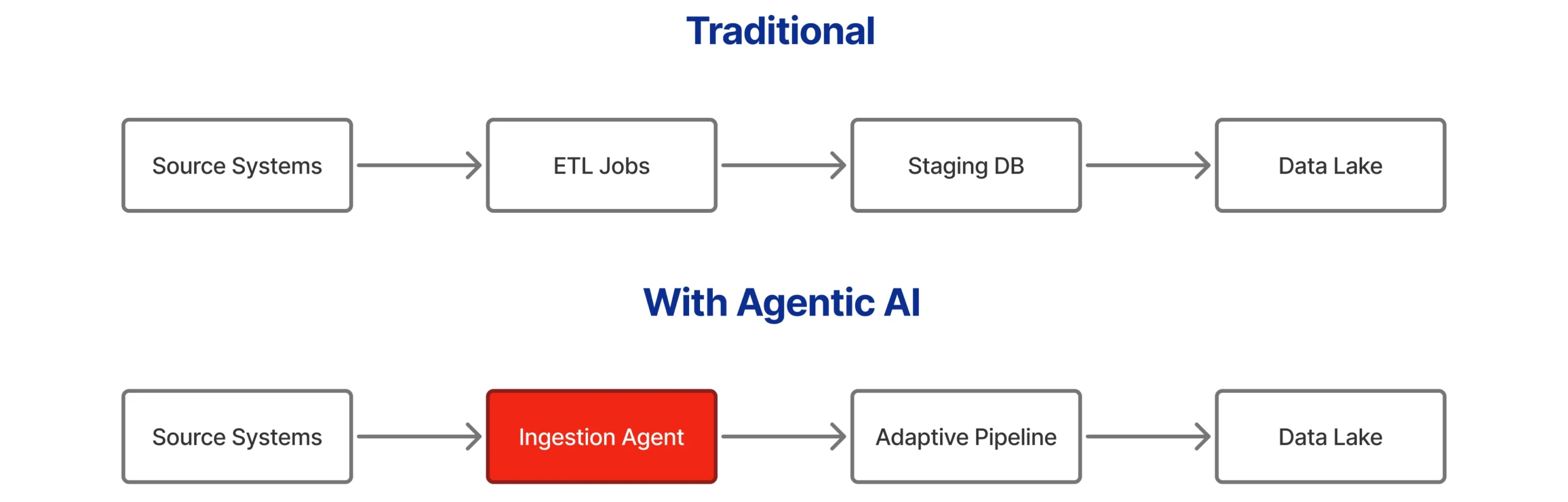

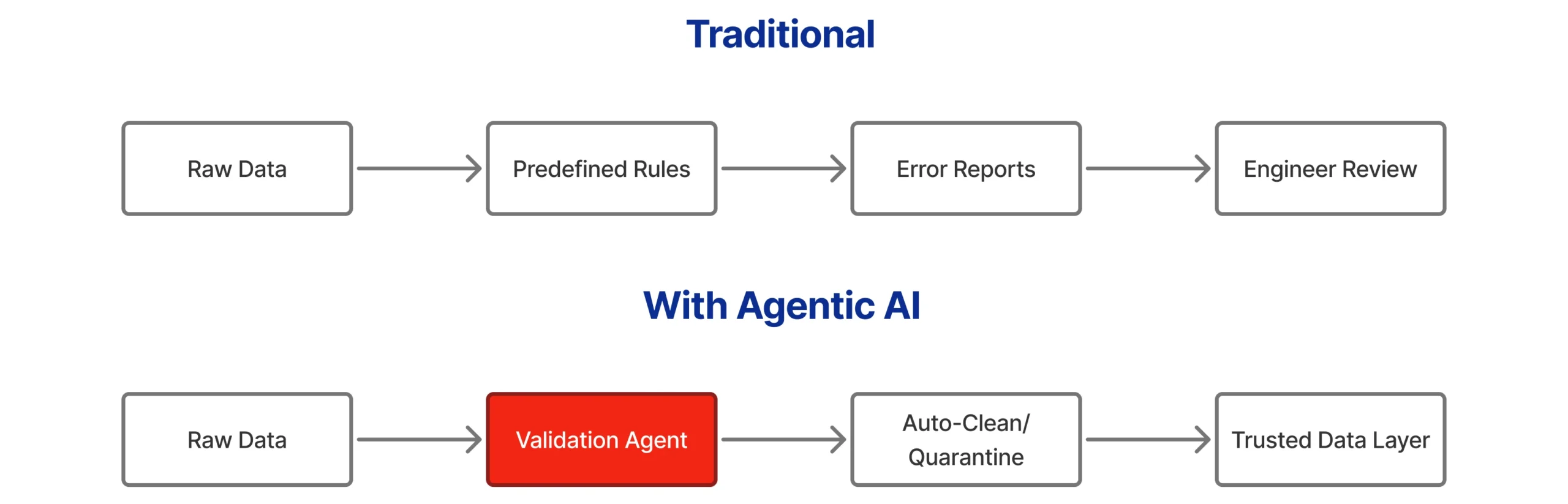

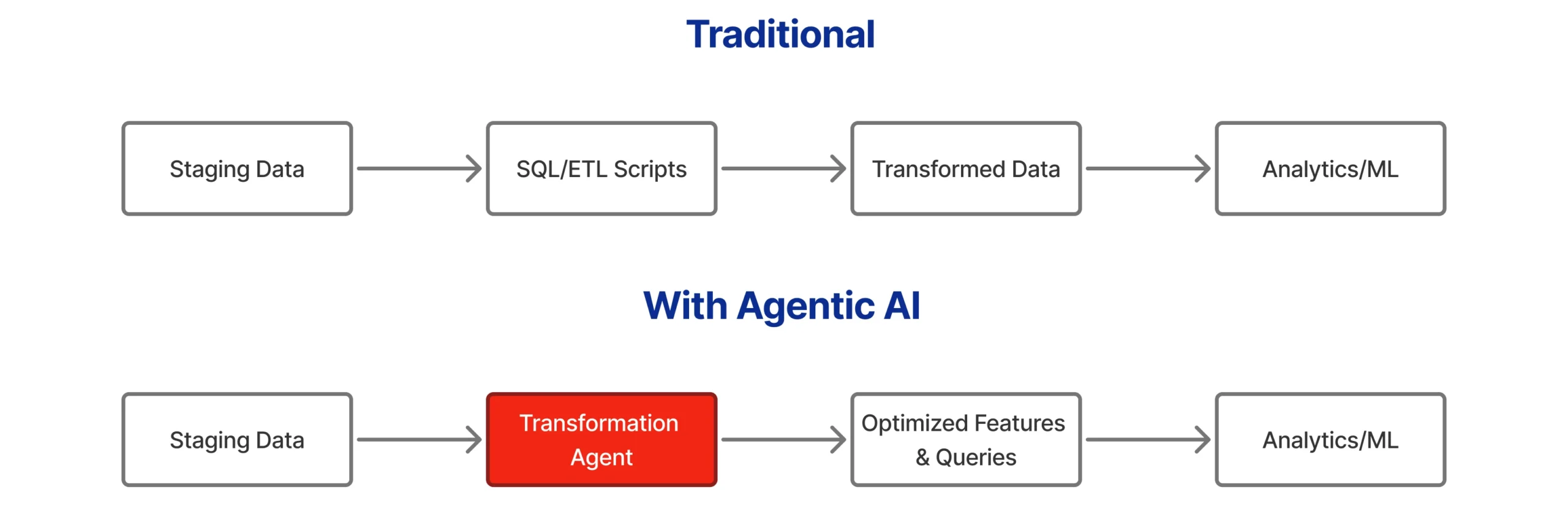

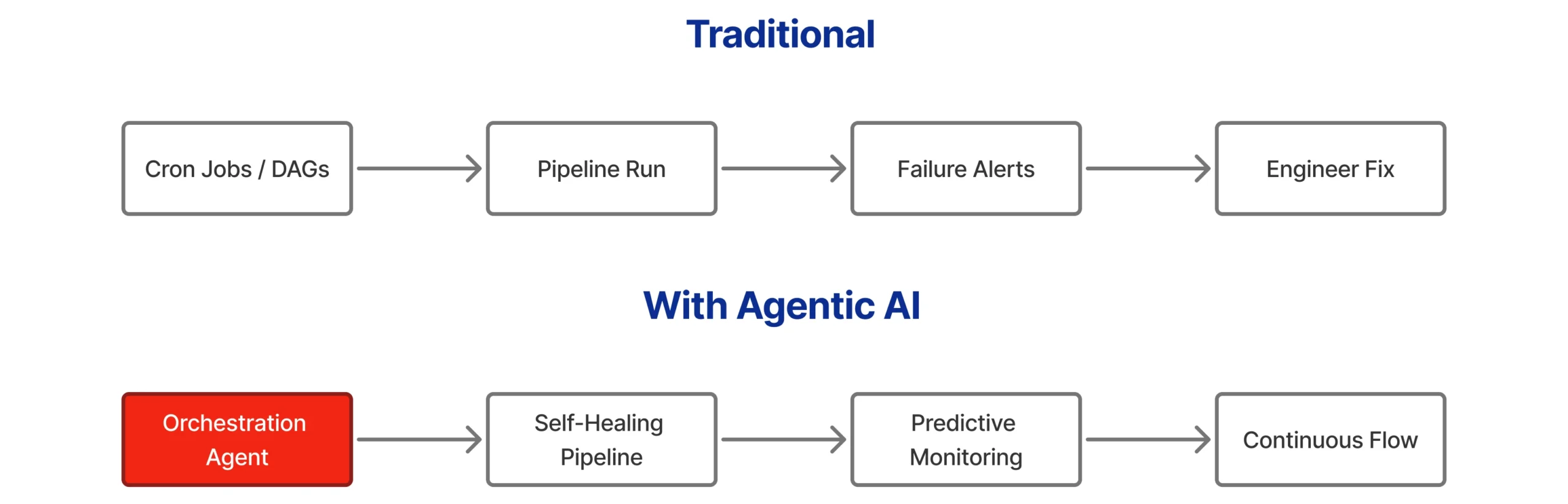

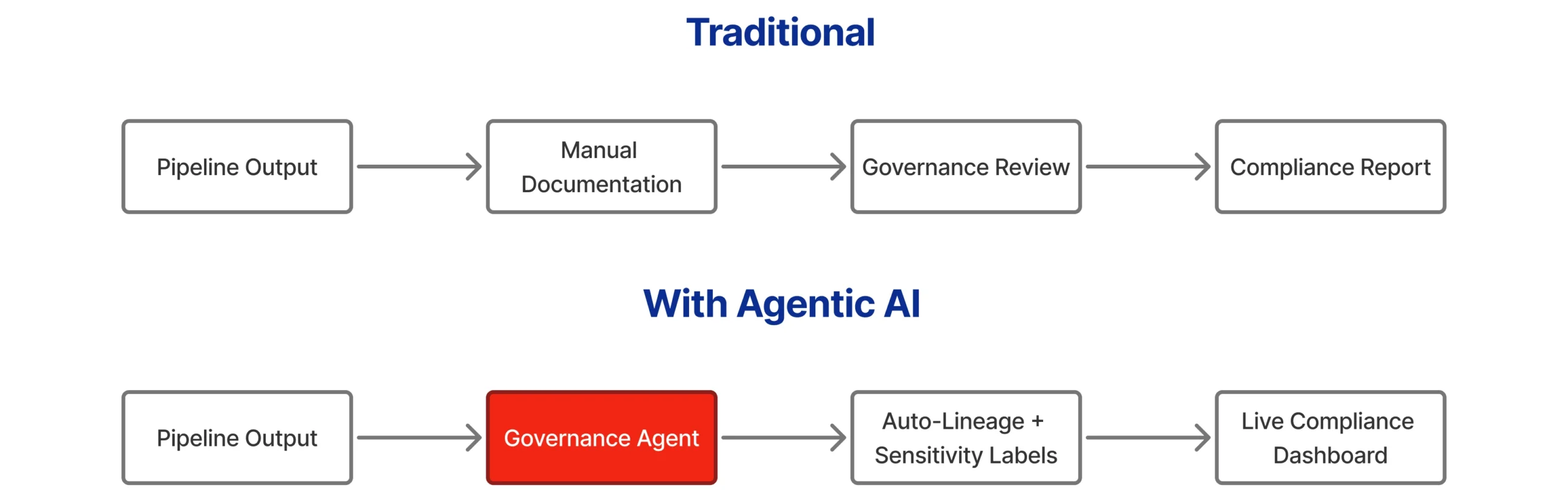

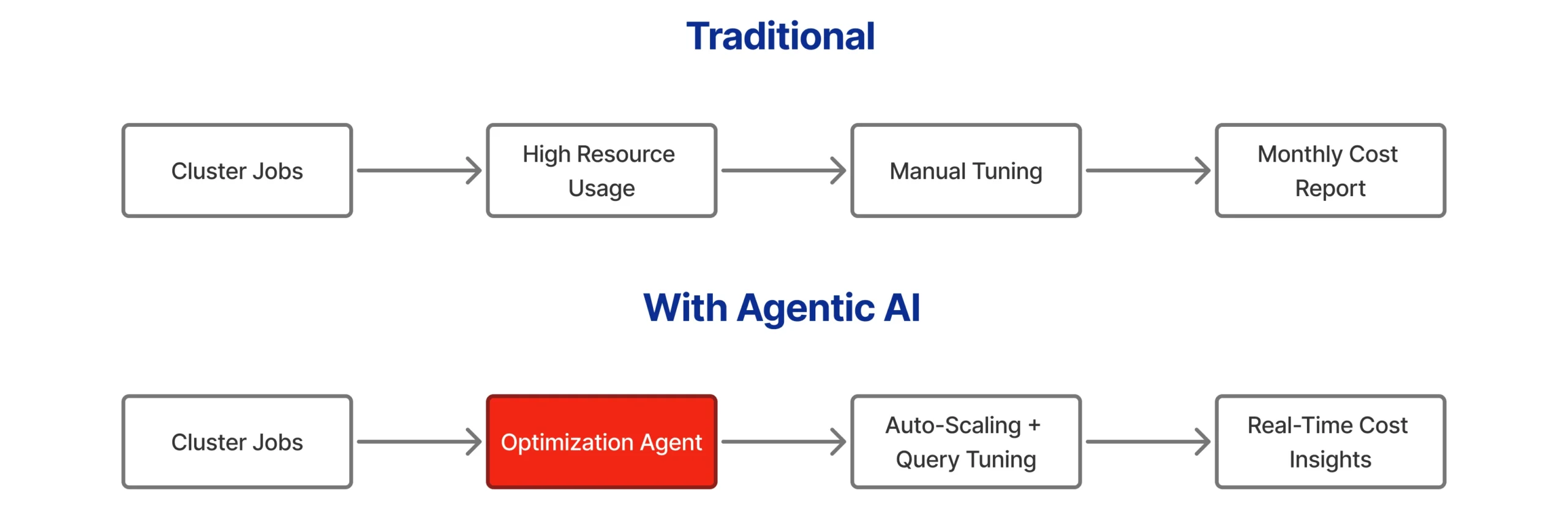

Instead of pipelines that wait for humans to troubleshoot, we have autonomous AI agents that manage, optimize, and govern data flows on their own.
