12 mins

Apr 28, 2025

A decade ago, software teams faced the same tension we see in GenAI today: innovation sprinting ahead of operations. Back then, developers were shipping code faster than IT could deploy it. What emerged was DevOps.

A few years later, data science teams ran into their own wall. Models trained beautifully in notebooks refused to behave in production. The response was MLOps.

Now, history is repeating itself. Enterprises are again facing the same operational gap that once gave rise to DevOps and MLOps.

That’s where GenAIOps comes in. It’s the framework that ensures LLMs, vector databases, prompt workflows, and feedback loops run as parts of one living system rather than as scattered pieces of innovation.

At Azilen, we’ve spent years helping enterprises build and operationalize AI systems across HRTech, FinTech, Retail, Healthcare, and other sectors. Through that experience, one lesson stands out: every successful GenAI initiative needs an operational backbone. GenAIOps is that backbone.

In an enterprise setup, GenAIOps acts as the control layer that connects experimentation with production. It brings engineering discipline to how GenAI systems are deployed, monitored, and evolved.

At its core, GenAIOps handles:

→ Data-to-Model Flow: Managing ingestion, preprocessing, embedding refresh cycles, and synchronization with vector databases.

→ Model Lifecycle: Tracking versions, fine-tunes, and deployment metadata for every LLM and sub-component.

→ Prompt and Context Management: Maintaining prompt repositories, testing variants, and aligning them with changing business logic.

→ Observability: Capturing real-time telemetry for every inference, including cost, latency, drift, and hallucination metrics.

→ Feedback Integration: Routing human evaluations or user corrections back into training and prompt optimization pipelines.

→ Governance: Enforcing compliance, auditability, and content safety rules during runtime.

In simpler terms, GenAIOps ensures that the intelligence layer of an enterprise system stays reliable, measurable, and continuously improving – no matter how many models, data sources, or use cases sit behind it.

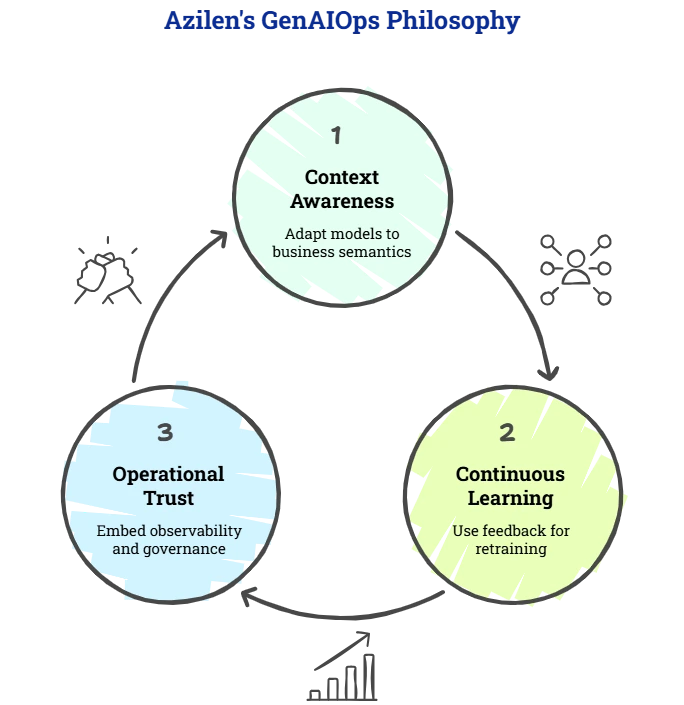

Our approach evolved from years of enterprise MLOps, but GenAIOps adds more moving parts (prompts, embeddings, evaluation loops) and a heavier burden of responsibility.

We build around three principles:

1️⃣ Context Awareness: Every model, prompt, and embedding should adapt to business semantics, not just syntax.

2️⃣ Continuous Learning: Feedback loops feed directly into retraining workflows, whether supervised, reinforcement-based, or prompt-tuned.

3️⃣ Operational Trust: Observability and governance are embedded, not bolted on later.

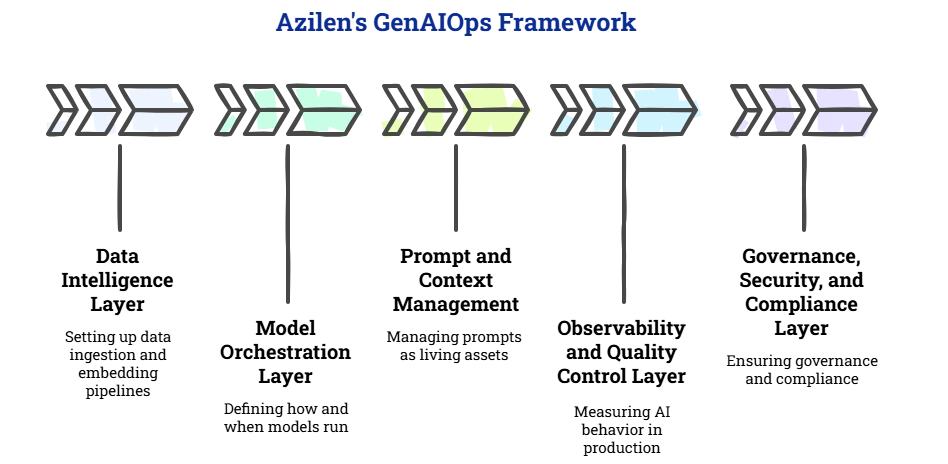

Our GenAIOps framework isn’t fixed; it’s modular and adapts to each client’s stack, compliance needs, and scaling ambitions.

The data layer forms the core of GenAIOps.

We set up data ingestion and embedding pipelines that keep enterprise knowledge fresh and searchable. The layer typically includes:

→ Connectors for structured and unstructured data.

→ Automated embedding refresh cycles so retrieval always reflects the latest business data.

→ Semantic filters and validation agents that flag incomplete or low-confidence data before indexing.
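The refresh cycle and validation gate described above can be sketched as follows. This is a minimal illustration under stated assumptions, not our production pipeline: `toy_embed` is a hypothetical hash-based stand-in for a real embedding model, `passes_validation` is a hypothetical word-count check standing in for semantic filters, and the in-memory `EmbeddingIndex` stands in for a vector database. The idea it shows is that only new or changed documents are re-embedded, detected via a content hash, and low-quality documents are flagged instead of indexed.

```python
import hashlib
import math
from dataclasses import dataclass, field


def toy_embed(text: str, dim: int = 8) -> list[float]:
    """Hypothetical stand-in for a real embedding model: hashes tokens into
    a fixed-size vector and L2-normalizes it."""
    vec = [0.0] * dim
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def passes_validation(text: str, min_words: int = 5) -> bool:
    """Hypothetical quality gate: flag empty or very short documents."""
    return len(text.split()) >= min_words


@dataclass
class EmbeddingIndex:
    vectors: dict[str, list[float]] = field(default_factory=dict)
    hashes: dict[str, str] = field(default_factory=dict)  # content hash per doc


def refresh(index: EmbeddingIndex, docs: dict[str, str]) -> dict[str, list[str]]:
    """One refresh cycle: re-embed only new or changed documents."""
    report: dict[str, list[str]] = {"embedded": [], "skipped": [], "flagged": []}
    for doc_id, text in docs.items():
        digest = hashlib.sha256(text.encode()).hexdigest()
        if index.hashes.get(doc_id) == digest:
            report["skipped"].append(doc_id)  # unchanged since the last cycle
        elif not passes_validation(text):
            report["flagged"].append(doc_id)  # held back for review, not indexed
        else:
            index.vectors[doc_id] = toy_embed(text)
            index.hashes[doc_id] = digest
            report["embedded"].append(doc_id)
    return report


if __name__ == "__main__":
    index = EmbeddingIndex()
    docs = {"leave-policy": "Employees accrue paid leave every month", "draft": "TBD"}
    print(refresh(index, docs))  # leave-policy embedded, draft flagged
    print(refresh(index, docs))  # leave-policy skipped, draft flagged again
```

Hashing content keeps refresh cost proportional to what actually changed. A fuller version would also remove vectors for documents deleted at the source.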

The orchestration layer defines how and when models run.

Instead of one monolithic LLM, we use model routing across a mix of open-source and proprietary models, each optimized for specific tasks.

For example, a pipeline might route summarization tasks to Mixtral, reasoning tasks to GPT-4, and internal knowledge queries to a fine-tuned Llama model.

Each call is versioned, logged, and benchmarked for performance and cost, which allows dynamic routing decisions in real time.
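A minimal sketch of task-based routing with per-call logging is shown below. It assumes a static routing table that mirrors the example above; the model identifiers, version strings, and per-token prices are placeholders (not real vendor pricing), and the backends are stub callables in place of real model clients. The logged latency and estimated cost are the signals a dynamic router would consult; the table itself is static here to keep the example small.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Route:
    model: str                 # illustrative model identifier
    version: str               # pinned version, logged with every call
    cost_per_1k_tokens: float  # placeholder price, not real vendor pricing


# Task type -> route. Mirrors the example in the text; names/versions are hypothetical.
ROUTES = {
    "summarize": Route("mixtral", "v1", 0.0007),
    "reason": Route("gpt-4", "v2", 0.03),
    "internal_qa": Route("llama-ft-internal", "2025-04-01", 0.0004),
}

CALL_LOG: list[dict] = []


def route_and_call(task: str, prompt: str,
                   backends: dict[str, Callable[[str], str]]) -> str:
    """Pick the model for a task, call it, and log version, latency, and cost."""
    route = ROUTES.get(task)
    if route is None:
        raise ValueError(f"no route configured for task {task!r}")
    start = time.perf_counter()
    output = backends[route.model](prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    tokens = len(prompt.split()) + len(output.split())  # crude word-count proxy for tokens
    CALL_LOG.append({
        "task": task,
        "model": route.model,
        "version": route.version,
        "latency_ms": latency_ms,
        "est_cost": tokens / 1000 * route.cost_per_1k_tokens,
    })
    return output


if __name__ == "__main__":
    # Stub backends stand in for real model clients.
    backends = {
        "mixtral": lambda p: "short summary",
        "gpt-4": lambda p: "step-by-step answer",
        "llama-ft-internal": lambda p: "policy answer",
    }
    print(route_and_call("reason", "Why did Q3 churn rise?", backends))
    print(CALL_LOG[-1]["model"], CALL_LOG[-1]["version"])
```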

The orchestration layer also handles adaptive retraining, automatically triggering model fine-tunes when feedback or drift metrics cross defined thresholds.
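The threshold logic behind such a trigger can be as simple as the sketch below. The threshold values and the thumbs-up/thumbs-down feedback format are assumptions for illustration; the drift score is assumed to be computed elsewhere by the monitoring pipeline. The minimum feedback count keeps a handful of early ratings from kicking off an expensive fine-tune.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrainThresholds:
    max_drift: float = 0.2          # hypothetical drift-score ceiling
    min_positive_rate: float = 0.8  # hypothetical share of positive ratings
    min_feedback_count: int = 50    # don't act on too few ratings


def should_fine_tune(drift_score: float, feedback: list[bool],
                     t: RetrainThresholds = RetrainThresholds()) -> tuple[bool, str]:
    """Return (trigger?, reason). drift_score is assumed to come from the
    monitoring pipeline; feedback holds thumbs-up (True) / thumbs-down (False)."""
    if drift_score > t.max_drift:
        return True, f"drift {drift_score:.2f} exceeds {t.max_drift}"
    if len(feedback) >= t.min_feedback_count:
        positive_rate = sum(feedback) / len(feedback)
        if positive_rate < t.min_positive_rate:
            return True, f"positive feedback {positive_rate:.0%} below {t.min_positive_rate:.0%}"
    return False, "within thresholds"


if __name__ == "__main__":
    print(should_fine_tune(0.05, [True] * 30 + [False] * 20))
    # (True, 'positive feedback 60% below 80%')
```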

In the prompt layer, prompts are treated as living assets.

Every prompt template, instruction, and context mapping goes through Git-style version control, with each version linked to its evaluation results.

We use automated A/B prompt testing with LLM evaluators that score outputs for factual accuracy, tone, and alignment with brand or compliance guidelines.
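The A/B comparison can be sketched as below, assuming two injected functions: `generate`, which would call the LLM with a prompt template, and `judge`, which would be an LLM evaluator returning a score between 0 and 1. Both are stubs in the demo, and the winner rule (mean score with a minimum margin) is a deliberately simple assumption; a real setup would also check statistical significance and score several criteria such as accuracy, tone, and compliance separately.

```python
import statistics
from typing import Callable

GenerateFn = Callable[[str, str], str]  # (prompt template, question) -> answer
JudgeFn = Callable[[str, str], float]   # (question, answer) -> score in [0, 1]


def ab_test_prompts(prompt_a: str, prompt_b: str, questions: list[str],
                    generate: GenerateFn, judge: JudgeFn,
                    min_margin: float = 0.05) -> dict:
    """Score both variants on the same questions and pick a winner.
    min_margin is a crude guard; a real setup would also test significance."""
    scores: dict[str, list[float]] = {"A": [], "B": []}
    for q in questions:
        for label, template in (("A", prompt_a), ("B", prompt_b)):
            scores[label].append(judge(q, generate(template, q)))
    mean_a = statistics.mean(scores["A"])
    mean_b = statistics.mean(scores["B"])
    if abs(mean_b - mean_a) < min_margin:
        winner = "tie"
    else:
        winner = "B" if mean_b > mean_a else "A"
    return {"mean_A": mean_a, "mean_B": mean_b, "winner": winner}


if __name__ == "__main__":
    # Stubs: a real generator calls the LLM; a real judge is an LLM evaluator.
    def generate(template: str, q: str) -> str:
        return template.format(q=q)

    def judge(q: str, answer: str) -> float:
        return 1.0 if "cite" in answer else 0.5

    print(ab_test_prompts("Answer: {q}", "Answer and cite sources: {q}",
                          ["What is our leave policy?"], generate, judge))
```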

Teams can roll back to earlier prompt versions just like they would with a code release.
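Versioning with rollback reduces to keeping every version and moving an "active" pointer. The in-memory `PromptRegistry` below is a hypothetical stand-in for a Git-backed store; it shows each version carrying its evaluation score and rollback leaving history intact, the same way a code release rollback does.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptVersion:
    version: int
    template: str
    eval_score: Optional[float] = None  # evaluation result linked to this version


@dataclass
class PromptRegistry:
    history: dict[str, list[PromptVersion]] = field(default_factory=dict)
    active: dict[str, int] = field(default_factory=dict)

    def publish(self, name: str, template: str, eval_score: Optional[float] = None) -> int:
        """Append a new version and make it active."""
        versions = self.history.setdefault(name, [])
        v = PromptVersion(len(versions) + 1, template, eval_score)
        versions.append(v)
        self.active[name] = v.version
        return v.version

    def get(self, name: str) -> PromptVersion:
        return self.history[name][self.active[name] - 1]

    def rollback(self, name: str, to_version: int) -> None:
        if not 1 <= to_version <= len(self.history[name]):
            raise ValueError(f"{name} has no version {to_version}")
        self.active[name] = to_version  # history is kept; only the active pointer moves


if __name__ == "__main__":
    reg = PromptRegistry()
    reg.publish("support", "Answer politely: {q}", eval_score=0.82)
    reg.publish("support", "Answer in one line: {q}", eval_score=0.74)
    reg.rollback("support", 1)          # v2 scored worse, so revert
    print(reg.get("support").template)  # Answer politely: {q}
```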

The observability layer is where we measure how the AI actually behaves in production.

We integrate inference-level logging, evaluation pipelines, and human feedback dashboards. Key observability metrics include:

→ Drift detection and hallucination ratio

→ Average response latency and cost per inference

→ Business-aligned quality scores (e.g., candidate match relevance in HRTech, document accuracy in BFSI)

The system continuously correlates these metrics to trigger alerts, initiate retraining, or refine prompts.
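A minimal sketch of turning inference logs into the metrics above and raising alerts follows. Assumptions: each logged inference carries a hallucination label (from an evaluator or human review), the alert limits are placeholder values, and drift is measured with the Population Stability Index (PSI) over binned score distributions, which is one common choice among several. A frequently cited rule of thumb reads PSI above roughly 0.2 as a significant shift.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class InferenceRecord:
    latency_ms: float
    cost_usd: float
    hallucinated: bool  # label assumed to come from an evaluator or human review


# Hypothetical alert limits; real values depend on the use case and SLA.
ALERT_LIMITS = {"avg_latency_ms": 2000.0, "cost_per_inference": 0.01,
                "hallucination_ratio": 0.05, "drift_psi": 0.2}


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions, each summing to 1.
    Used here as one possible drift score."""
    return sum((a - e) * math.log((a + eps) / (e + eps)) for e, a in zip(expected, actual))


def summarize(records: list[InferenceRecord], baseline_bins: list[float],
              current_bins: list[float]) -> dict[str, float]:
    """Aggregate one monitoring window into the metrics tracked above."""
    if not records:
        raise ValueError("no inference records in this window")
    n = len(records)
    return {
        "avg_latency_ms": statistics.mean(r.latency_ms for r in records),
        "cost_per_inference": sum(r.cost_usd for r in records) / n,
        "hallucination_ratio": sum(r.hallucinated for r in records) / n,
        "drift_psi": psi(baseline_bins, current_bins),
    }


def alerts(metrics: dict[str, float], limits: dict[str, float] = ALERT_LIMITS) -> list[str]:
    """List every metric that exceeds its limit."""
    return [f"{name}={metrics[name]:.4g} exceeds {limit}"
            for name, limit in limits.items() if metrics[name] > limit]


if __name__ == "__main__":
    window = [InferenceRecord(1200, 0.004, False), InferenceRecord(2800, 0.006, True)]
    print(alerts(summarize(window, [0.5, 0.5], [0.9, 0.1])))
```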

GenAI without governance quickly becomes a risk vector.

The governance layer defines who can access what, how outputs are validated, and how each response is traced. We combine policy-based access control, data masking, encryption at rest and in transit, and audit logs across the inference lifecycle.
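Policy-based access control with an audit trail can be sketched as a filter applied to retrieved documents before they reach the prompt. The role names, data classifications, and JSON log format below are hypothetical; in practice the policies would live in an identity or policy engine and the log in durable storage. Unknown roles get an empty permission set, so the default is deny.

```python
import json
import time

# Hypothetical role -> data classifications that role may see.
POLICIES = {
    "hr_analyst": {"public", "internal", "hr_confidential"},
    "support_agent": {"public", "internal"},
}

AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store


def authorize(user: str, role: str, classification: str, request_id: str) -> bool:
    """Check the policy and record the decision, allowed or not."""
    allowed = classification in POLICIES.get(role, set())  # unknown role -> deny
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(), "request_id": request_id, "user": user,
        "role": role, "classification": classification, "allowed": allowed,
    }))
    return allowed


def filter_context(user: str, role: str, docs: list[dict], request_id: str) -> list[dict]:
    """Drop retrieved documents the caller is not entitled to see."""
    return [d for d in docs if authorize(user, role, d["classification"], request_id)]


if __name__ == "__main__":
    docs = [{"id": 1, "classification": "internal"},
            {"id": 2, "classification": "hr_confidential"}]
    print([d["id"] for d in filter_context("ana", "support_agent", docs, "req-1")])  # [1]
```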

For BFSI and Healthcare clients, we often integrate automated compliance agents that scan outputs in real time for PII, bias, or regulatory breaches before responses reach the end user.
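The output-scanning step can be sketched with a few regular expressions that mask PII before a response is released and withhold it entirely for high-risk findings. The patterns are deliberately simple and illustrative (they will miss many formats and can flag long numbers that are not phone numbers), the choice to block on SSNs is an assumption, and bias or regulatory checks are out of scope for this sketch; real compliance agents use much richer detection.

```python
import re

# Illustrative patterns only; order matters (more specific patterns run first).
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\+?\d[\d -]{8,}\d")),
]

WITHHELD_MESSAGE = "This response was withheld by a compliance check."


def scan_and_mask(text: str) -> tuple[str, list[str]]:
    """Replace each PII match with a [LABEL] placeholder and report what was found."""
    findings = []
    for label, pattern in PII_PATTERNS:
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings.append(label)
    return text, findings


def release(response: str, block_on: frozenset[str] = frozenset({"SSN"})) -> str:
    """Run before the response reaches the user: mask, or withhold if high-risk."""
    masked, findings = scan_and_mask(response)
    if block_on.intersection(findings):
        return WITHHELD_MESSAGE
    return masked


if __name__ == "__main__":
    print(release("Reach Priya at priya.k@example.com or +1 415 555 0100."))
    # Reach Priya at [EMAIL] or [PHONE].
```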

In most enterprises, DevOps and MLOps foundations already exist. GenAIOps adds a new layer, but we don’t rebuild what’s proven. We extend it.

Once GenAI moves past pilots, real challenges appear.

From scaling prompts to monitoring model behavior, production GenAI teaches lessons that shape how GenAIOps matures inside enterprises.

Operationalizing GenAI is never a plug-and-play process. Every enterprise runs on unique data foundations, domain logic, and regulatory realities.

As an enterprise AI development company, we approach GenAIOps as a co-design journey rather than a hand-off implementation.

Our model is built around three stages of collaboration, each engineered to align technology with business context before scaling:

We start by mapping your existing data flows, model pipelines, and compliance boundaries.

Our teams identify:

✔️ Integration points with existing DevOps and MLOps tools.

✔️ Data readiness for retrieval, embedding, and fine-tuning.

✔️ Governance constraints and evaluation metrics that matter to your domain.

The output is a GenAIOps Blueprint, a system diagram showing how intelligence, data, and feedback will move through your stack.

Once the blueprint is approved, we build one live GenAIOps module (typically observability, prompt governance, or model orchestration) within your infrastructure.

This pilot acts as the proof of alignment:

✔️ Telemetry begins flowing into dashboards.

✔️ Prompts and responses are versioned under CI/CD.

✔️ Early feedback loops start retraining the model.

The goal is to validate reliability, latency, and governance early, without disrupting production workloads.

After the pilot stabilizes, we extend the framework across multiple use cases, business units, or product lines.

This includes automation of data refresh cycles, multi-LLM routing, and continuous evaluation pipelines.

At this stage, GenAIOps becomes part of your standard delivery and release process – monitored, governed, and continuously learning.

MLOps focuses on deploying and maintaining machine learning models. GenAIOps extends that scope to handle LLMs, prompts, vector databases, and continuous feedback loops. It adds context management, real-time evaluation, and governance across generative workflows. In short, MLOps runs models; GenAIOps runs intelligent systems.

DevOps handles application delivery. MLOps manages model deployment. But GenAI systems have new moving parts, such as vector stores, prompts, embeddings, evaluation pipelines, and compliance logic that change daily. GenAIOps creates the operational layer that keeps all of these synchronized and measurable.

GenAIOps can build on your existing stack. We design it to integrate directly with your current tools, whether you use Kubernetes, MLflow, Kubeflow, Airflow, or Databricks. Our frameworks reuse your CI/CD pipelines for prompt updates, model versioning, and retrieval workflows instead of introducing a new platform.

We embed governance and security right into the GenAIOps layers. That includes access control, data masking, audit logging, and real-time policy validation. For regulated sectors like BFSI or Healthcare, we also add automated compliance agents that scan every output for bias, PII, or policy breaches.

The pilot usually starts with one operational layer, such as observability or model orchestration, inside your environment. We connect it to your existing data pipelines, run a few controlled workloads, and benchmark latency, reliability, and governance metrics. Once validated, we scale it to multiple models or business units.

1️⃣ GenAIOps: A framework for managing and operationalizing Generative AI systems at scale. It covers data-to-model pipelines, prompt management, observability, and governance across LLM-driven workflows.

2️⃣ LLM (Large Language Model): A machine learning model trained on large text datasets to understand and generate human-like language. Examples include GPT-4, Claude, Llama, and Mixtral.

3️⃣ Embedding: A vectorized representation of text, used to capture semantic meaning for tasks like search, retrieval, and recommendation inside GenAI pipelines.

4️⃣ Vector Database: A database optimized for storing and querying embeddings. It powers semantic search and retrieval in GenAI systems. Popular examples include Pinecone and Weaviate; FAISS, a similarity-search library rather than a full database, is often used for the same purpose.

5️⃣ Prompt Engineering: The process of designing and refining inputs (prompts) given to LLMs to guide their responses toward desired outcomes in tone, accuracy, and structure.
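To make the embedding and vector database entries concrete, here is a minimal sketch of the core query a vector store answers: ranking stored vectors by cosine similarity to a query vector. The three-dimensional vectors and document names are made up for illustration; real embeddings have hundreds or thousands of dimensions, and real vector databases use approximate nearest-neighbour indexes rather than this brute-force scan.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Brute-force nearest neighbours by cosine similarity."""
    ranked = sorted(store, key=lambda doc_id: cosine(query, store[doc_id]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Toy 3-dimensional vectors standing in for real embeddings.
    store = {
        "leave-policy": [0.9, 0.1, 0.0],
        "payroll-faq": [0.1, 0.9, 0.1],
        "onboarding-guide": [0.2, 0.2, 0.9],
    }
    print(top_k([1.0, 0.0, 0.1], store))  # ['leave-policy', 'onboarding-guide']
```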