Over the past few years, AI-native SaaS platforms have evolved at a remarkable pace. Many teams now release AI-driven features every few weeks: copilots, recommendation layers, conversational analytics, automated insights, and more.

Yet behind this velocity lies a quiet struggle that most teams eventually face: scaling AI performance without slowing down their release cycles.

At Azilen, we’ve seen this pattern across multiple product ecosystems. Even well-architected AI stacks begin to feel friction when data volumes grow, inference traffic spikes, or retraining cycles become too frequent.

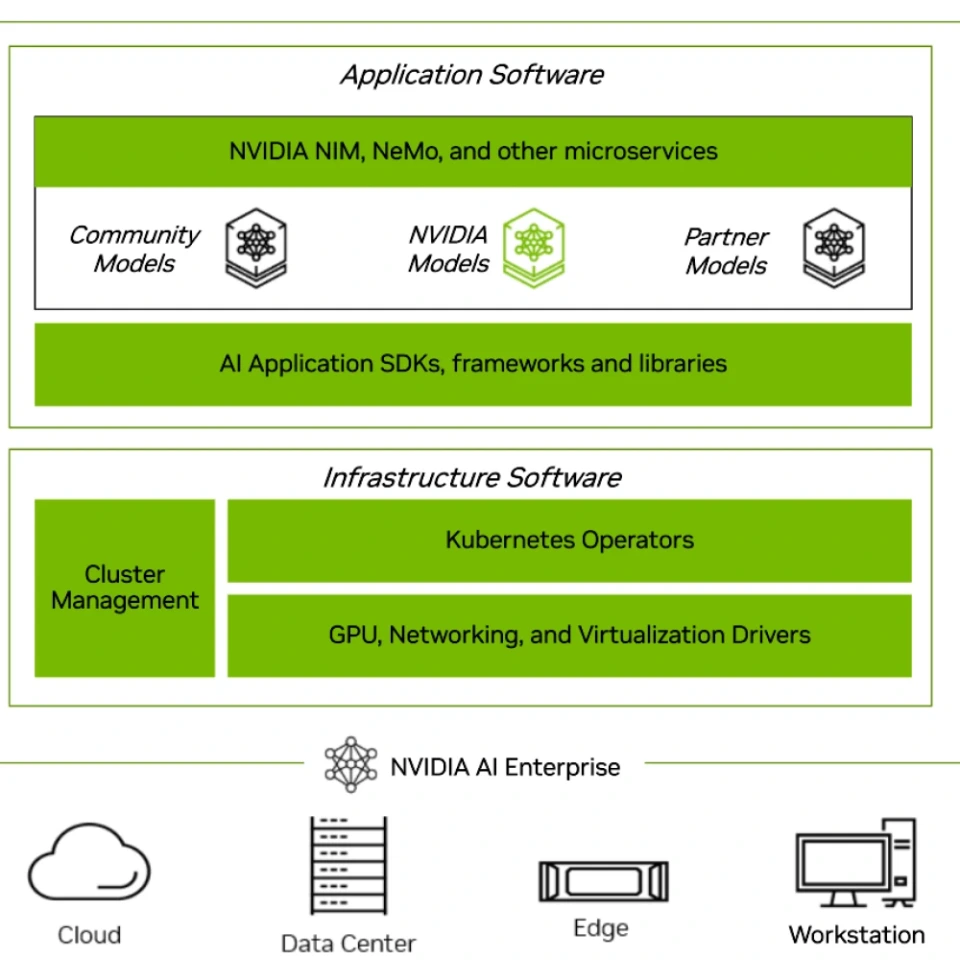

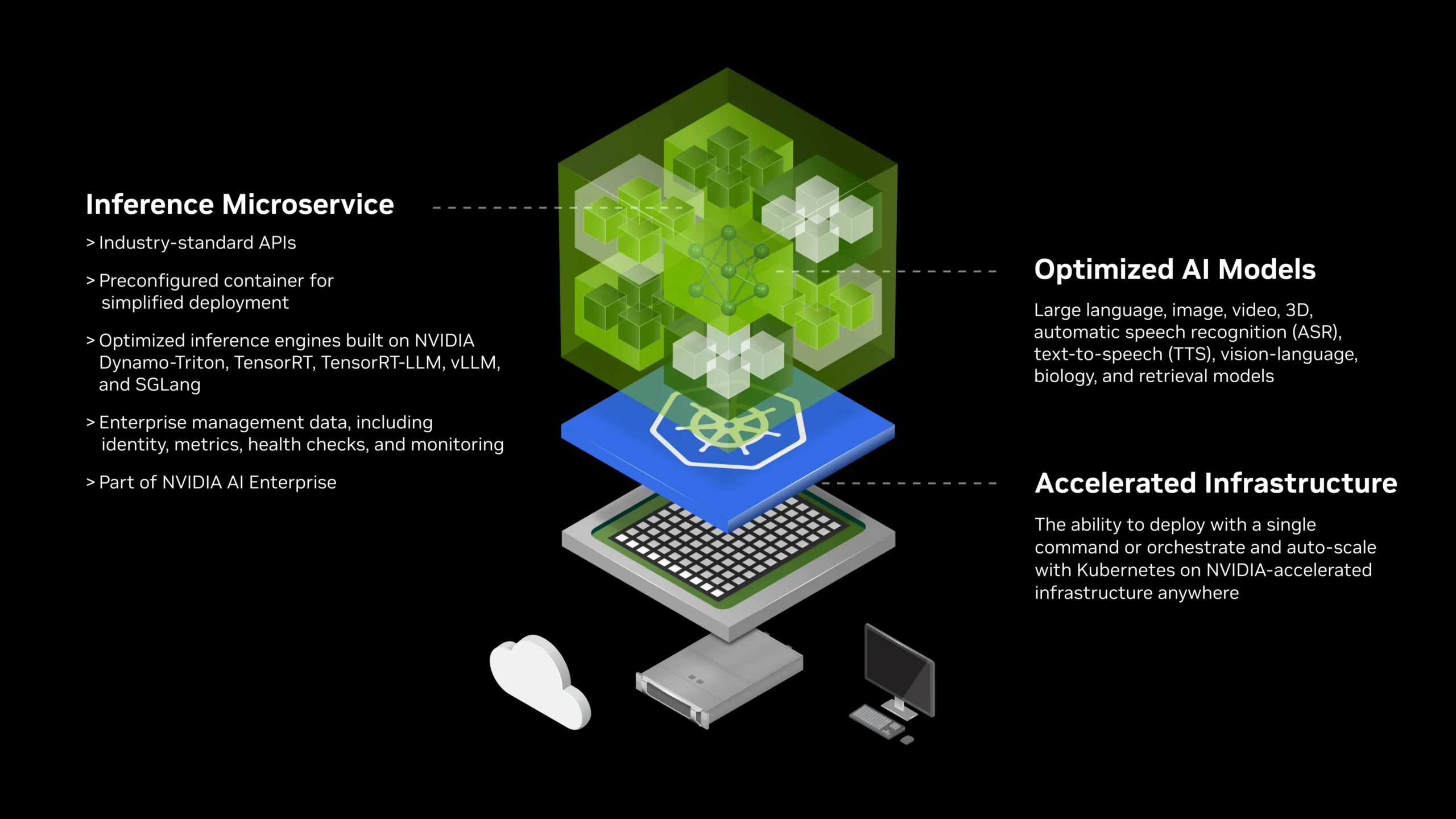

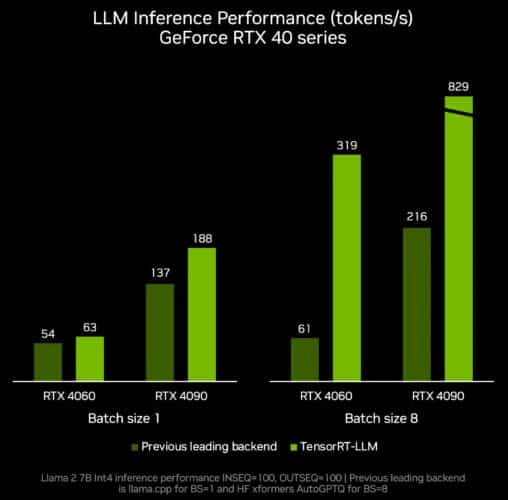

This is exactly where NVIDIA GPU Cloud integration changes the equation.
