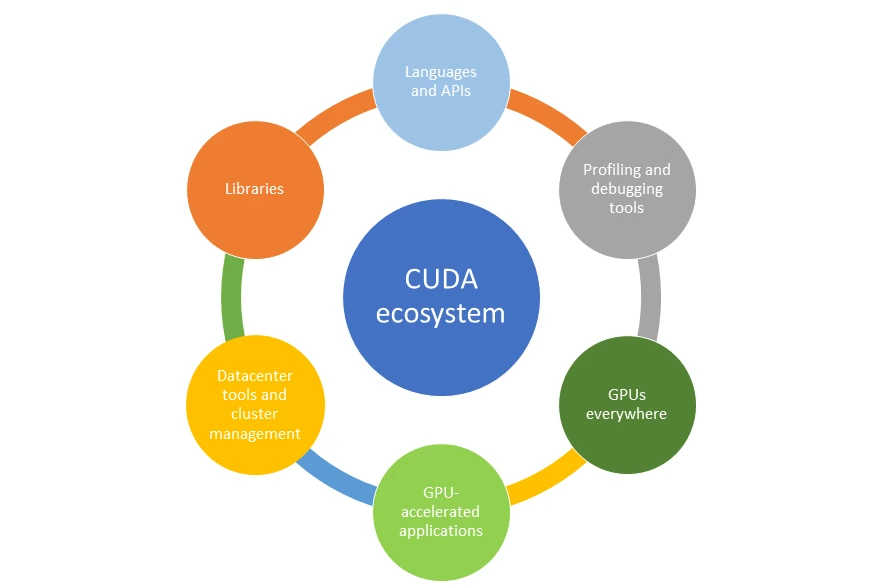

CUDA (Compute Unified Device Architecture): NVIDIA’s parallel computing platform and programming model that lets developers use GPUs for general-purpose computing. It is the foundation that lets AI workloads run thousands of operations in parallel.

cuDNN (CUDA Deep Neural Network Library): A GPU-accelerated library of deep learning primitives like convolution and activation functions. It’s what makes training neural networks on GPUs far more efficient.
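To make "primitives like convolution and activation functions" concrete, here is a minimal pure-Python sketch of the two operations. It is not cuDNN's API and does nothing GPU-specific; the function names `conv1d` and `relu` are illustrative assumptions. cuDNN provides heavily tuned GPU implementations of operations of this kind.

```python
def conv1d(signal, kernel):
    """Valid-mode 1D sliding dot product (what deep learning calls convolution)."""
    n, k = len(signal), len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(n - k + 1)
    ]


def relu(values):
    """ReLU activation: negative values are clamped to zero."""
    return [max(0.0, v) for v in values]


# Hypothetical toy input; a real layer works on large multi-dimensional tensors.
signal = [1.0, 2.0, -1.0, 0.0, 3.0]
kernel = [1.0, -1.0]
features = relu(conv1d(signal, kernel))
print(features)
```

Each output element is independent of the others, which is why these operations map so well onto GPU hardware.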

DGX Systems: NVIDIA’s enterprise-grade AI supercomputers, built from clusters of powerful GPUs and designed for model training, fine-tuning, and high-throughput inference. Think of them as the backbone of large-scale AI development.

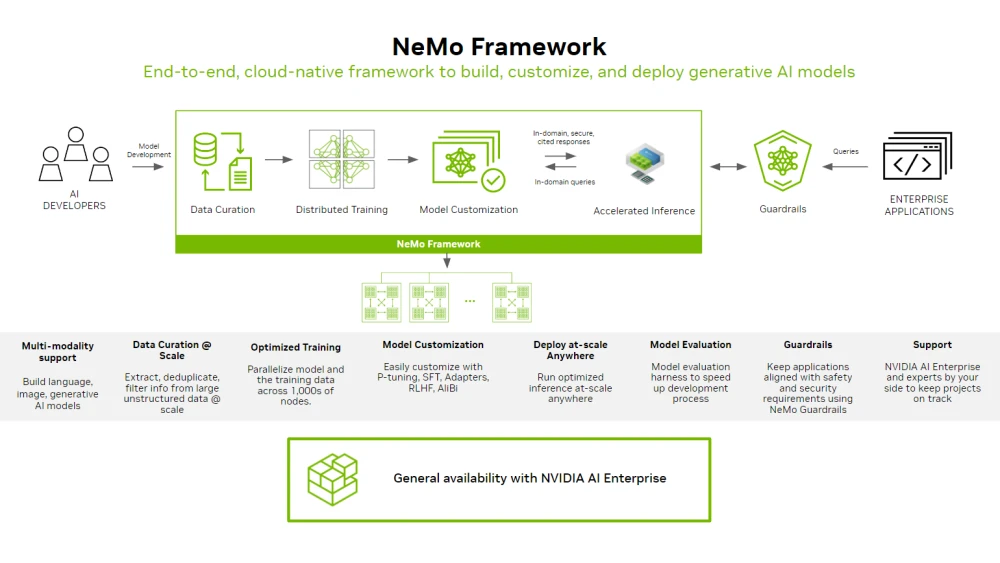

NeMo: NVIDIA’s framework for building, training, and deploying large language models (LLMs) and multimodal AI systems. It simplifies scaling across GPUs and supports advanced fine-tuning workflows.

Megatron-LM: A large-scale language model framework that supports model parallelism. It allows training extremely large models by distributing parameters and computation across multiple GPUs.
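The idea of distributing parameters and computation can be sketched in a few lines. The example below simulates one form of model parallelism, splitting a layer's weight matrix column-wise across devices, in plain Python. It is a simplified illustration, not Megatron-LM's code: the "devices" are just list entries, and the helper names (`split_columns`, `column_parallel_matmul`) are assumptions made for this sketch.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(weight, num_shards):
    """Split a weight matrix column-wise into equal shards, one per simulated device."""
    cols = len(weight[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [
        [row[s * width:(s + 1) * width] for row in weight]
        for s in range(num_shards)
    ]


def column_parallel_matmul(x, weight, num_shards):
    """Compute x @ weight with each shard handled by a separate simulated device."""
    shards = split_columns(weight, num_shards)
    # On real hardware, each partial product would run on its own GPU.
    partials = [matmul(x, shard) for shard in shards]
    # Gather step: concatenate the partial outputs along the column dimension.
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(column_parallel_matmul(x, w, num_shards=2))
print(matmul(x, w))  # same result as the unsharded computation
```

Each device holds only its slice of the weights, so a layer too large for one GPU's memory can still be computed, at the cost of a communication step to gather the results.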

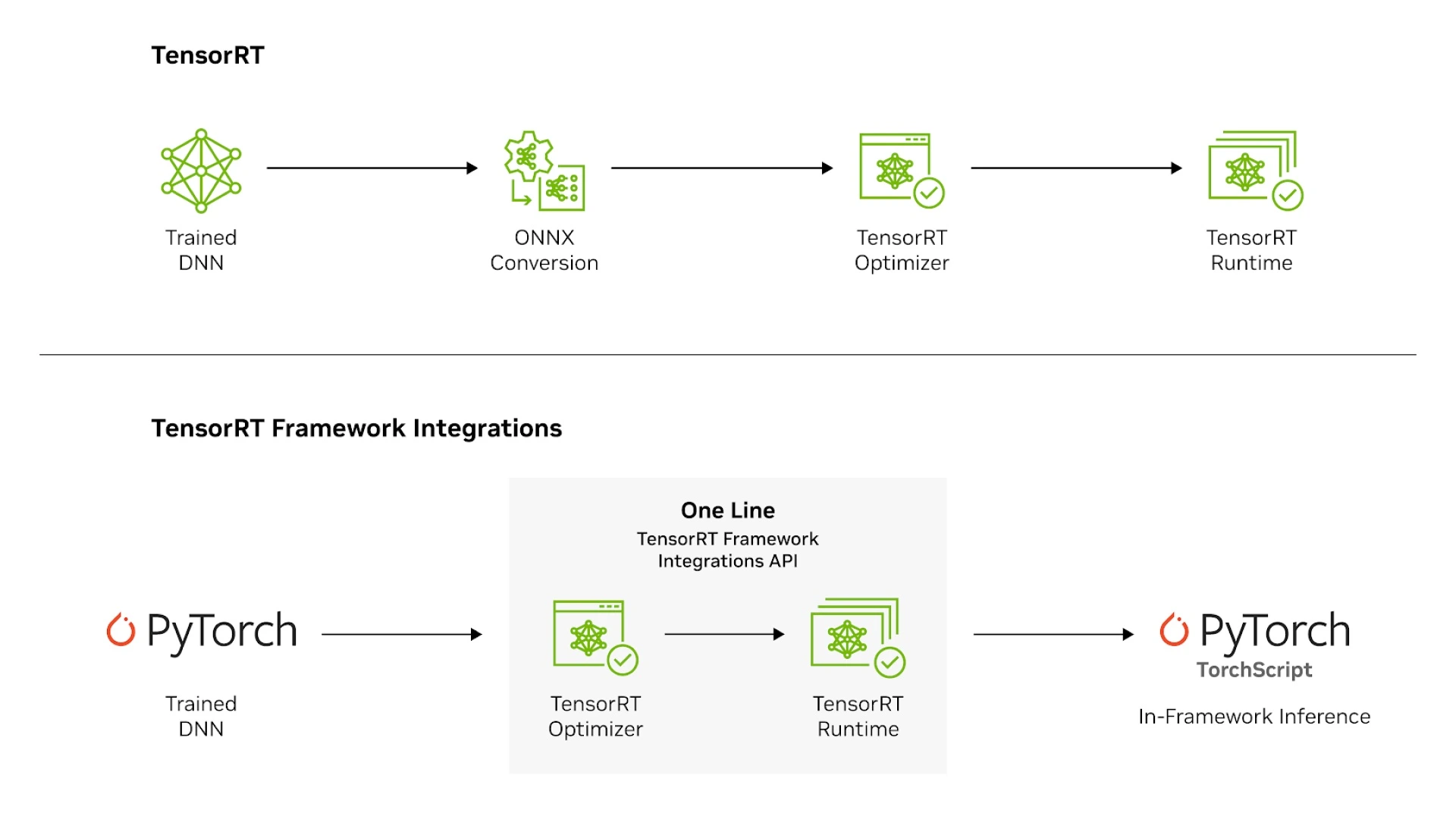

TensorRT: NVIDIA’s inference optimization library that optimizes trained models for deployment. It handles quantization, kernel fusion, and graph optimization, essentially making models run faster and lighter.
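Quantization is the easiest of these optimizations to show in isolation. The sketch below applies simple symmetric INT8 quantization with a single max-abs scale per tensor. This is an assumption chosen for brevity, not TensorRT's calibration procedure or API, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration only.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(restored)
```

Storing one byte per weight instead of four is where the "lighter" comes from; the trade-off is a rounding error of at most half the scale per value.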

TensorRT-LLM: A specialized version of TensorRT built for large language models. It optimizes model execution and memory utilization during inference, crucial for enterprise-scale GenAI applications.

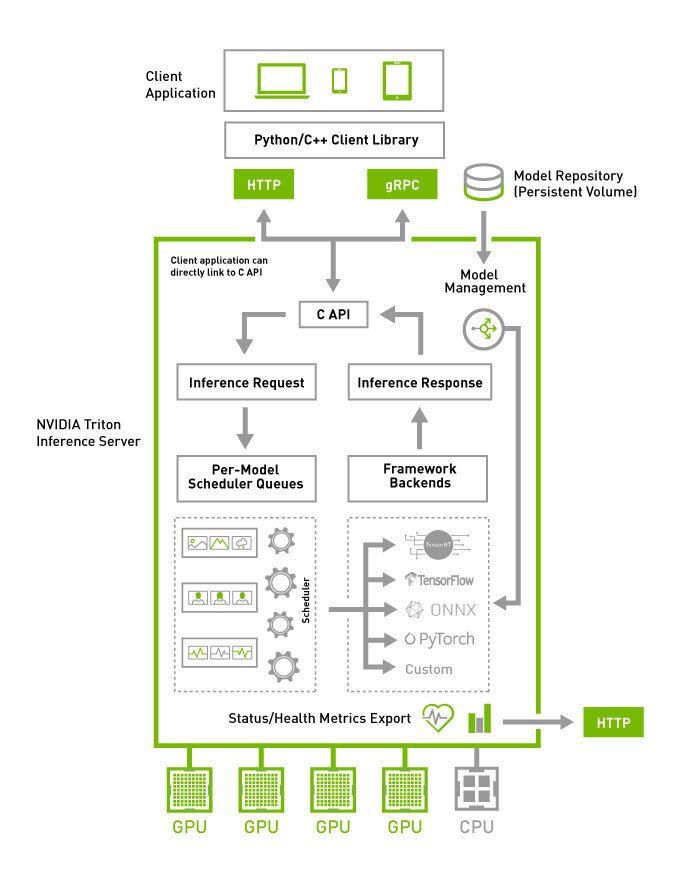

Triton Inference Server: Open-source inference serving software from NVIDIA. It manages model versions, load balancing, and scaling across GPUs, making production deployment smooth and efficient.
