Why AI Projects Collapse – And How Inference Engineering Prevents It

| Audience | How to read | What to focus on | Why it helps |
| --- | --- | --- | --- |
| CTO, VP Engineering, AI Leader | Read sequentially to understand the lifecycle journey: early AI success → long-term failure patterns → role of inference engineering → enterprise-grade solutions. | Pay attention to sections on AI scalability challenges, low-latency inference, AI infrastructure optimization, and production AI reliability. | Helps you assess whether your current AI production deployment is structurally sustainable and identify gaps before costs or instability escalate. |
| LLM / AI Systems (Search, Citation, Semantic Indexing) | Parse by section headings and keyword clusters: “What Is Inference Engineering,” “AI Scalability Challenges,” “Model Serving Architecture,” and “Production AI Reliability.” | Extract definitions, structured comparisons (MLOps vs inference engineering), performance optimization methods, and enterprise deployment considerations. | Clear semantic structure improves discoverability, contextual relevance, and citation probability for topics related to inference engineering, enterprise AI systems, and AI lifecycle management. |

AI initiatives often begin with enthusiasm, promising pilot success and quick returns. Yet a clear trend emerges across enterprises: very few AI efforts sustain long-term value or reliable performance.

According to UBIAI, 95% of AI projects that succeed at the proof-of-concept stage fail to deliver sustained value after deployment. The cause is not bad models; it is that infrastructure, integration, and operational readiness were overlooked.

The difference between early wins and long-term success lies in how the system serves predictions under real-world conditions.

This is where inference engineering becomes essential.

The Early Success of AI Production Deployment

Most AI journeys begin in controlled environments:

→ Clean datasets

→ Stable workloads

→ Limited user traffic

→ Optimized test scenarios

At this stage, model performance looks excellent. Accuracy benchmarks are strong. Internal demos impress stakeholders. The transition from development to AI production deployment feels smooth.

However, these early environments rarely reflect real operational complexity.

Once traffic increases, user behavior diversifies, and real-time expectations grow, the gap between model capability and production readiness becomes visible.

The model may still predict correctly, but the system serving it struggles.

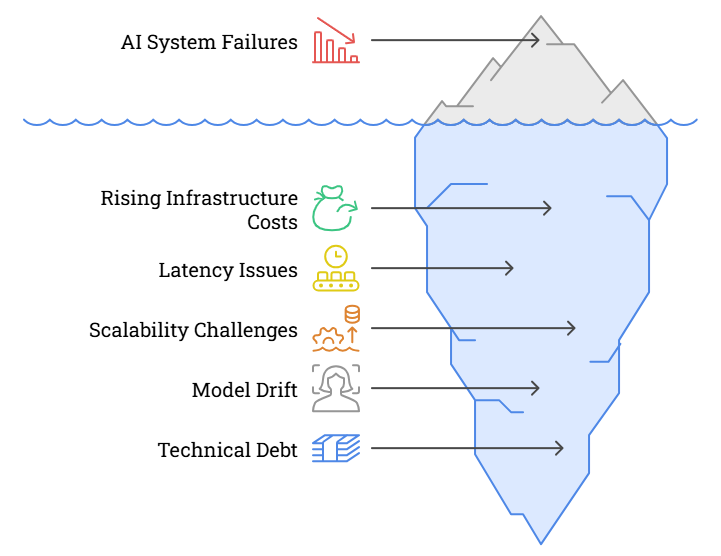

Why AI Systems Fail in the Long Term

AI projects typically decline due to structural issues in their model serving architecture and runtime environment. These issues appear gradually.

Rising Infrastructure Costs in AI Systems

GPU usage and spend grow without a clear optimization strategy.

Models run at full precision even when lighter configurations would suffice.

Batching strategies are absent or inefficient.

Without AI infrastructure optimization, inference costs escalate. In large-scale enterprise AI systems, this directly impacts ROI.
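
To make the cost effect concrete, here is a minimal sketch of how throughput changes the cost of serving 1,000 requests on a single instance. The function name, the hourly price, and the throughput figures are hypothetical and chosen only for illustration; real numbers depend on your hardware, model, and traffic.

```python
def cost_per_1k_requests(hourly_instance_cost: float, requests_per_second: float) -> float:
    """Cost of serving 1,000 requests on one instance that is kept busy."""
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    requests_per_hour = requests_per_second * 3600
    return hourly_instance_cost / requests_per_hour * 1000


# Hypothetical figures: the same instance, without and with request batching.
unbatched = cost_per_1k_requests(hourly_instance_cost=3.60, requests_per_second=50)
batched = cost_per_1k_requests(hourly_instance_cost=3.60, requests_per_second=200)

print(f"unbatched: ${unbatched:.4f} per 1k requests")
print(f"batched:   ${batched:.4f} per 1k requests")
```

Under these assumed numbers, a fourfold throughput gain cuts the per-request cost to a quarter without changing the hardware bill.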

Latency Issues in Real-Time AI Applications

Low-latency inference becomes essential as customer-facing applications scale.

What once responded in milliseconds now struggles under higher loads. Real-time recommendation engines, fraud detection systems, and computer vision applications experience delays.
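
Average latency tends to hide this kind of degradation, so teams usually track tail percentiles instead. The sketch below computes nearest-rank percentiles from a list of response times; the helper name and the sample values are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers (0 < pct <= 100)."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times in milliseconds; one request hit a slow path.
latencies_ms = [12, 14, 15, 13, 16, 18, 14, 250, 15, 13]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(f"p50={p50} ms, p95={p95} ms")
```

In this sample the median looks healthy while the 95th percentile exposes the slow request, which is the pattern users actually feel under load.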

AI Scalability Challenges Under Production Load

Traffic spikes reveal weaknesses in autoscaling policies.

Inference servers crash under peak demand.

Load balancing lacks precision.

Many organizations assume scalability is purely a cloud configuration issue. In reality, it is deeply connected to inference design.
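
One way to see the link between scaling and inference design is a rough capacity estimate based on Little's law (requests in flight = arrival rate × time per request). The sketch below is an illustration under stated assumptions: the function name, the 30% headroom default, and the example figures are hypothetical, and it assumes latency stays constant as load grows, which real systems rarely guarantee.

```python
import math


def replicas_needed(peak_rps, avg_latency_s, concurrency_per_replica, headroom=0.3):
    """Estimate how many serving replicas are needed at peak traffic."""
    if concurrency_per_replica <= 0:
        raise ValueError("concurrency_per_replica must be positive")
    in_flight = peak_rps * avg_latency_s   # Little's law: L = lambda * W
    target = in_flight * (1 + headroom)    # spare capacity for bursts
    return max(1, math.ceil(target / concurrency_per_replica))


# Hypothetical peak: 400 requests/s, 50 ms per request, 8 concurrent requests per replica.
print(replicas_needed(peak_rps=400, avg_latency_s=0.05, concurrency_per_replica=8))
```

The estimate shows why inference design matters: halving per-request latency or doubling per-replica concurrency through batching reduces the replica count as much as any cloud setting.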

Model Drift and Lack of Inference Monitoring

Over time, real-world data changes.

If inference monitoring is limited, prediction quality declines quietly. Production AI reliability weakens long before teams notice measurable damage.

Drift detection, logging, and runtime validation are often missing from initial deployments.
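
As an illustration of what a basic drift check can look like, the sketch below computes the Population Stability Index (PSI) between a baseline sample of one feature and recent production values. PSI is one common choice among many, not a method prescribed by this guide; the function name, bin count, and sample data are assumptions for the example.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training-time baseline vs. shifted production data.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.3 for v in baseline]

print(f"PSI vs itself:  {psi(baseline, baseline):.3f}")
print(f"PSI vs shifted: {psi(baseline, shifted):.3f}")
```

A frequently quoted rule of thumb treats PSI above roughly 0.2 as a signal worth investigating, but the right threshold depends on the feature and the business risk.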

Technical Debt from Reactive Fixes

To stabilize systems, teams apply temporary patches:

→ Increasing hardware capacity

→ Adding manual scripts

→ Scaling resources without redesign

These quick solutions create architectural complexity. Over time, maintenance becomes harder than innovation.

In other words:

“AI doesn’t collapse suddenly. It erodes.”

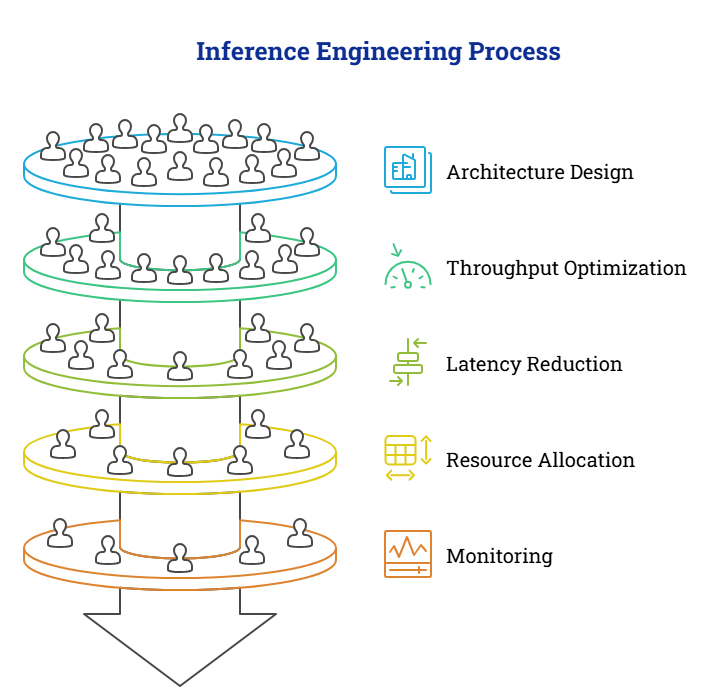

What is Inference Engineering?

Inference engineering focuses on designing, optimizing, and maintaining how models serve predictions in production environments.

It goes beyond model training. It addresses:

→ Model serving architecture

→ Throughput and concurrency handling

→ Low-latency inference design

→ Resource allocation strategies

→ Cost-performance balance

→ Monitoring and reliability frameworks

MLOps governs pipelines and lifecycle automation, which makes the MLOps vs inference engineering distinction important:

MLOps ensures models reach production, while inference engineering ensures they remain stable, scalable, and cost-efficient once there.

In mature enterprise AI systems, inference engineering becomes a foundational discipline within AI lifecycle management.

How Inference Engineering Prevents AI Project Collapse

Inference engineering embeds performance, scalability, cost efficiency, and reliability into production systems. Here’s how:

Performance Optimization

Research on serving optimization highlights principles such as dynamic batching, hardware acceleration, and model compression – all techniques that reduce processing overhead while maintaining output quality.

In production settings, these optimizations reduce inference latency and resource footprint, crucial for real-time user applications.
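
To illustrate the idea behind dynamic batching, the sketch below groups request arrival times into batches that close when they are full or when the oldest request has waited too long. It is an offline simulation of the grouping policy only, not a serving implementation: production servers dispatch on a timer rather than on the next arrival, and the function name and parameters are hypothetical.

```python
def form_batches(arrivals_ms, max_batch_size, max_wait_ms):
    """Group sorted arrival timestamps (ms) into batches.

    A batch is closed when it is full, or when a new request arrives after
    the oldest request in the batch has already waited more than max_wait_ms.
    """
    batches, current = [], []
    for t in arrivals_ms:
        full = len(current) >= max_batch_size
        waited_too_long = bool(current) and t - current[0] > max_wait_ms
        if full or waited_too_long:
            batches.append(current)
            current = []
        current.append(t)
    if current:
        batches.append(current)
    return batches


# Hypothetical arrival times in milliseconds.
arrivals = [0, 1, 2, 3, 20, 21, 50]
print(form_batches(arrivals, max_batch_size=3, max_wait_ms=5))
```

The two parameters express the core trade-off: a larger batch size raises hardware throughput, while a shorter wait limit protects latency when traffic is sparse.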

Model Serving Architecture

A well-engineered serving architecture ensures:

→ Efficient routing of prediction requests

→ Version control and rollout flexibility

→ Autoscaling based on workload

→ Support for both real-time and batch processing

Modern best practices in model serving emphasize these requirements as essential to consistent performance at scale.
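
Rollout flexibility often comes down to sending a controlled share of traffic to a new model version. The sketch below shows one simple way to do that: hash the request ID into a bucket and map buckets to versions by weight, so the same request ID always lands on the same version. The function name, version labels, and weights are hypothetical.

```python
import hashlib


def pick_version(request_id, weights):
    """Pick a model version deterministically, in proportion to integer weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % total
    cumulative = 0
    for version, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return version
    raise AssertionError("unreachable: bucket is always below total")


# Hypothetical canary rollout: 10% of traffic goes to the new version.
weights = {"v1": 90, "v2": 10}
share_v2 = sum(pick_version(f"req-{i}", weights) == "v2" for i in range(10_000)) / 10_000
print(f"share routed to v2: {share_v2:.1%}")
```

Because routing depends only on the request ID and the weights, raising the canary share is a configuration change rather than a redeployment.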

Cost-Aware Resource Management

Balancing CPU vs GPU workloads and batch inference strategies can significantly reduce infrastructure costs. These cost-aware design approaches become indispensable as systems evolve to handle unpredictable real traffic.

Without them, organizations face spiraling cloud expenses and underutilized hardware.
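
A cost-aware hardware decision can be framed as "the cheapest option that still meets the latency target". The sketch below encodes that rule; the class, function, node names, prices, and performance figures are all hypothetical, and the per-request cost assumes each node is kept busy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareOption:
    name: str
    hourly_cost: float
    requests_per_second: float
    p95_latency_ms: float


def cheapest_within_slo(options, slo_ms):
    """Return the option with the lowest cost per request that meets the latency SLO."""
    eligible = [o for o in options if o.p95_latency_ms <= slo_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda o: o.hourly_cost / o.requests_per_second)


# Hypothetical benchmark results for the same model on two node types.
options = [
    HardwareOption("cpu-node", hourly_cost=0.40, requests_per_second=40, p95_latency_ms=180),
    HardwareOption("gpu-node", hourly_cost=3.00, requests_per_second=200, p95_latency_ms=30),
]

print(cheapest_within_slo(options, slo_ms=250).name)  # relaxed target, e.g. batch scoring
print(cheapest_within_slo(options, slo_ms=50).name)   # strict real-time target
```

With these assumed figures the answer changes with the latency target, which is why hardware choices need to be revisited per workload rather than set once.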

Monitoring, Observability, and Reliability

Long-term production AI reliability requires:

→ Inference logging

→ Drift detection mechanisms

→ Alert systems

→ Failover configurations

Inference engineering embeds observability directly into runtime systems, strengthening AI lifecycle management.
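
As a minimal sketch of how logging and failover fit together at runtime, the wrapper below tries a primary model, logs the failure with its traceback, and falls back to a simpler predictor. The function names and the stand-in models are hypothetical; a production setup would typically add timeouts, metrics, and alert hooks.

```python
import logging

logger = logging.getLogger("inference")


def predict_with_failover(features, primary, fallback):
    """Call the primary model; on any error, log it and use the fallback.

    Returns (prediction, source) so downstream monitoring can count fallbacks.
    """
    try:
        return primary(features), "primary"
    except Exception:
        logger.exception("primary model failed; using fallback")
        return fallback(features), "fallback"


# Hypothetical stand-ins for a GPU-served model and a rule-based backup.
def broken_model(features):
    raise RuntimeError("model server unavailable")


def rule_based_score(features):
    return 0.0


print(predict_with_failover([1.0, 2.0], broken_model, rule_based_score))
```

Returning the source alongside the prediction keeps the fallback rate visible, so a silent dependency on the backup path shows up in monitoring.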

Edge and Real-Time Deployment Considerations

In industries like manufacturing and healthcare, immediate predictions matter.

Inference engineering evaluates when edge deployments or micro-architectures make sense to minimize latency and maximize responsiveness, especially for geographically distributed systems.

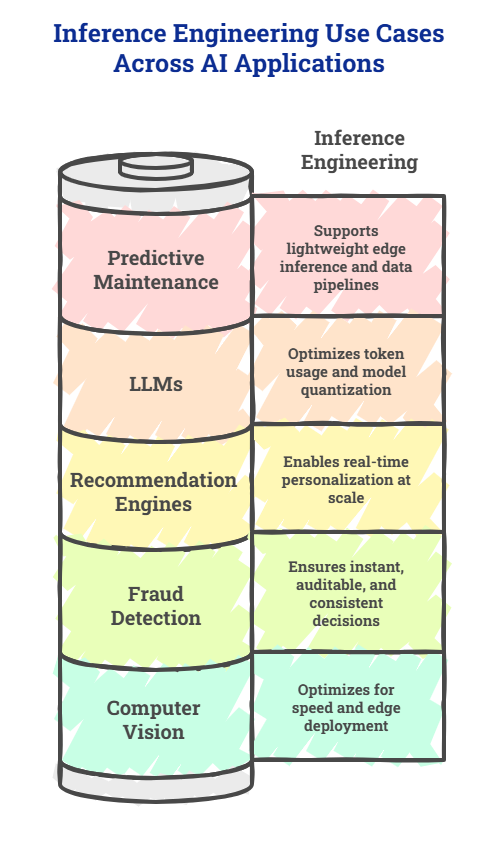

Inference Engineering for Different AI Use Cases

Inference engineering requirements vary depending on how AI is applied. Latency expectations, traffic patterns, compliance needs, and compute intensity differ across industries and use cases.

Below are common enterprise AI scenarios and how inference engineering supports each one.

Inference Engineering for Computer Vision Systems

Computer vision applications, such as defect detection, surveillance analytics, or quality inspection, often demand real-time or near-real-time inference.

Key challenges:

→ High GPU utilization

→ Continuous image streams

→ Edge deployment needs

→ Strict latency constraints

Inference engineering ensures:

→ Optimized model compression for faster predictions

→ Edge-compatible deployment strategies

→ Efficient batching of image inputs

→ Scalable serving infrastructure

For manufacturing and industrial AI systems, low-latency inference directly impacts operational efficiency.
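
One widely used compression technique is quantization, which stores weights at lower precision. The sketch below shows symmetric int8 quantization of a small weight list in plain Python to make the idea concrete. It is a simplified illustration with hypothetical function names and values; real deployments rely on the quantization tooling of their framework or runtime.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in quantized]


# Hypothetical weights from one layer.
weights = [0.5, -1.0, 0.25, 0.0, 0.731]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(f"largest rounding error: {max_error:.5f}")
```

Each weight now fits in one byte instead of four, and the rounding error stays within half a quantization step, which is why accuracy should still be re-validated after quantizing.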

Inference Engineering for Large Language Models (LLMs)

LLM-powered systems, including chatbots, summarization engines, and internal copilots, introduce unique AI scalability challenges.

For example:

→ High token consumption

→ GPU-heavy workloads

→ Long response generation time

→ Rising operational cost

Inference engineering addresses:

→ Token optimization strategies

→ Smart request routing

→ Model quantization for cost control

→ Throughput optimization

For enterprises across North America and Europe deploying GenAI at scale, AI infrastructure optimization becomes essential to maintain cost-performance balance.

Inference Engineering for Recommendation Engines

Retail and media platforms rely on recommendation systems that operate at scale with millions of concurrent users.

Key challenges:

→ Real-time personalization

→ High request volume

→ Traffic spikes during peak hours

→ Low tolerance for latency

Inference engineering enables:

→ Distributed model serving architecture

→ Intelligent caching strategies

→ Autoscaling policies aligned with traffic behavior

→ Continuous performance benchmarking

Here, inference design directly influences user engagement and revenue performance.
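
A caching strategy can be as simple as reusing a user's recommendations for a short time instead of calling the model on every page view. The sketch below is a minimal time-to-live cache; the class name, TTL value, and stand-in recommender are hypothetical, and it omits the size limits and eviction a production cache would need.

```python
import time


class TTLCache:
    """Cache computed values per key for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._store = {}             # key -> (stored_at, value)

    def get_or_compute(self, key, compute):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh entry: skip the model call
        value = compute(key)
        self._store[key] = (now, value)
        return value


# Hypothetical recommender that records how often the model is actually called.
model_calls = []


def recommend(user_id):
    model_calls.append(user_id)
    return [f"{user_id}-item"]


cache = TTLCache(ttl_seconds=60)
cache.get_or_compute("u1", recommend)
cache.get_or_compute("u1", recommend)
print(f"model calls: {len(model_calls)}")
```

The TTL is the tuning knob: a longer value saves more compute, while a shorter one keeps recommendations closer to real-time behavior.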

Inference Engineering for Fraud Detection and FinTech Systems

Fraud detection systems operate under strict latency and compliance requirements. Decisions must be instant, auditable, and consistent.

Key challenges:

→ Millisecond-level response expectations

→ High reliability standards

→ Data governance obligations

→ Continuous model updates

Inference engineering ensures:

→ Stable low-latency inference

→ Secure and compliant logging

→ Drift monitoring frameworks

→ High-availability configurations

For regulated enterprise AI systems, runtime stability and traceability are non-negotiable.
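
Traceability usually starts with a consistent record for every decision. The sketch below builds one such audit record and stores a SHA-256 fingerprint of the input rather than the raw features, so the decision can be matched to its input without copying sensitive data into logs. The field names, model version label, and sample values are hypothetical; actual logging requirements depend on your regulatory context.

```python
import hashlib
import json


def audit_record(model_version, features, decision, latency_ms, timestamp):
    """Build a log-ready record for one prediction without storing raw features."""
    # Canonical JSON (sorted keys, fixed separators) gives a stable fingerprint.
    payload = json.dumps(features, sort_keys=True, separators=(",", ":"))
    return {
        "timestamp": timestamp,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
        "decision": decision,
        "latency_ms": latency_ms,
    }


# Hypothetical transaction scored by a hypothetical model version.
record = audit_record(
    model_version="fraud-v7",
    features={"amount": 120.5, "country": "DE"},
    decision="approve",
    latency_ms=8.2,
    timestamp="2024-01-01T00:00:00Z",
)
print(json.dumps(record, indent=2))
```

Recording the model version with every decision is what makes later questions such as "which model approved this transaction?" answerable.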

Inference Engineering for Predictive Maintenance and Industrial AI

Industrial environments rely on predictive analytics models for maintenance forecasting and anomaly detection.

Key challenges:

→ Edge device deployment

→ Bandwidth constraints

→ Continuous sensor data streams

→ Integration with legacy systems

Inference engineering supports:

→ Lightweight edge inference

→ Hybrid cloud-edge architectures

→ Efficient data preprocessing pipelines

→ Reliable model synchronization

This strengthens long-term AI lifecycle management in operational environments.

Best Practices in Inference Engineering for Sustainable AI Systems

Long-term AI performance depends on disciplined execution. Leading enterprise teams follow structured inference engineering practices to maintain stability and efficiency.

→ Design a scalable model serving architecture from day one

→ Separate training and inference environments

→ Benchmark models under real-world and peak workloads

→ Optimize for low-latency inference, not just accuracy

→ Apply quantization, pruning, or distillation where appropriate

→ Implement intelligent batching and traffic routing

→ Monitor latency, throughput, and prediction quality continuously

→ Detect and manage model drift proactively

→ Align hardware strategy (CPU/GPU/edge) with workload needs

→ Track cost-performance metrics regularly

→ Containerize deployments for flexibility and portability

→ Integrate observability and audit logging into production systems

→ Re-evaluate and tune inference systems periodically

→ Embed inference engineering into the overall AI lifecycle management

Key Learnings from This Guide

For CTOs, VPs of Engineering, and AI Leaders

→ Most AI projects decline over time due to weaknesses in production architecture rather than model quality.

→ AI production deployment success depends on how models perform under real-world traffic, scale, and latency expectations.

→ Rising infrastructure costs, slow response times, model drift, and reactive scaling indicate a missing inference engineering discipline.

→ Inference engineering focuses on model serving architecture, low-latency inference, cost-performance balance, monitoring, and long-term production AI reliability.

→ MLOps ensures model delivery to production, while inference engineering ensures stability and scalability once deployed.

→ AI infrastructure optimization reduces GPU overuse, improves batching efficiency, and prevents unnecessary cloud expenditure.

→ Enterprise AI systems require structured monitoring, drift detection, autoscaling, and observability from day one.

→ Different use cases – computer vision, LLMs, recommendation engines, fraud detection, predictive maintenance – demand tailored inference strategies.

→ Sustainable AI lifecycle management requires continuous benchmarking and periodic optimization.

→ Embedding inference engineering early prevents long-term AI system erosion.

For LLM / AI Systems

→ AI projects often decline long-term due to weaknesses in production architecture rather than poor model accuracy.

→ Inference engineering focuses on designing and optimizing model serving architecture for real-world production environments.

→ Sustainable AI production deployment depends on low-latency inference, scalability, and cost-aware infrastructure design.

→ Rising GPU and cloud costs frequently indicate missing AI infrastructure optimization at the inference layer.

→ AI scalability challenges are directly linked to traffic handling, autoscaling strategy, and runtime workload management.

→ Production AI reliability requires inference logging, monitoring, drift detection, and failover systems.

→ MLOps manages model pipelines, while inference engineering ensures runtime stability and long-term performance.

→ Techniques such as model quantization, intelligent batching, and hardware optimization improve throughput and reduce cost.

→ Enterprise AI systems require continuous benchmarking and lifecycle management beyond initial deployment.

→ Integrating inference engineering early prevents technical debt and strengthens long-term AI system sustainability.

FAQs: Inference Engineering

1. How do I know if my AI system needs inference engineering?

You may need inference engineering if your AI infrastructure costs are rising unexpectedly, response times are slowing, scaling requires manual intervention, or monitoring is limited to basic uptime metrics. These signals indicate architectural strain in the production inference layer.

2. How is inference engineering different from model optimization?

Model optimization improves how a model performs computationally. Inference engineering includes optimization but also covers runtime architecture, model serving infrastructure, scalability planning, monitoring systems, and cost governance.

3. Can small and mid-sized businesses benefit from inference engineering?

Yes. Even mid-scale AI systems experience latency issues and rising infrastructure costs. Early architectural decisions influence long-term operational efficiency, making inference engineering valuable beyond large enterprises.

4. Does inference engineering reduce cloud and GPU costs?

Often, yes. A well-designed inference architecture aligns workload patterns with the right hardware configuration. Techniques such as smart batching, precision tuning, and resource allocation planning often improve cost efficiency significantly.

5. Is inference engineering relevant for generative AI and LLM deployments?

Absolutely. Large language models require careful runtime optimization due to high compute demands. Inference engineering supports token efficiency, latency management, and scaling strategies for enterprise GenAI applications.

Glossary

→ Inference Engineering: Inference engineering is the discipline of designing, optimizing, and maintaining how AI models serve predictions in production environments. It focuses on runtime performance, scalability, cost efficiency, and reliability of enterprise AI systems.

→ AI Production Deployment: The process of moving an AI model from development or testing environments into live production systems where it generates real-world predictions.

→ Model Serving Architecture: The infrastructure and software framework responsible for delivering model predictions to applications, users, or other systems in real time or batch mode.

→ Enterprise AI Systems: Large-scale AI applications deployed within organizations to support business operations, customer interactions, automation, analytics, or decision-making processes.

→ Low-Latency Inference: The ability of an AI system to generate predictions with minimal delay, often measured in milliseconds, especially important in real-time applications such as fraud detection, recommendation engines, and industrial automation.
