I was part of this closed-door webinar the other day, just a small group of B2B and B2C leaders, all talking about what they’re doing with Agentic AI. Some were deep into it, others still experimenting.

And there was plenty of excitement in the room. But also… a fair bit of pain.

The one thing that kept coming up was how most teams had jumped into building agents without thinking about a scalable or sustainable tech stack. Sure, they had impressive demos. But once those agents were dropped into real-world workflows, they just couldn’t hold up.

It’s something I see more and more, and yeah, the buzz around agents has a lot to do with it.

But sitting there, I couldn’t help thinking: we’ve been down this road at Azilen. We’ve built agentic AI systems for different industries. Still building them. Still learning. But we’ve figured out a few things about overcoming the tech stack dilemma.

So, I thought, why not share that?

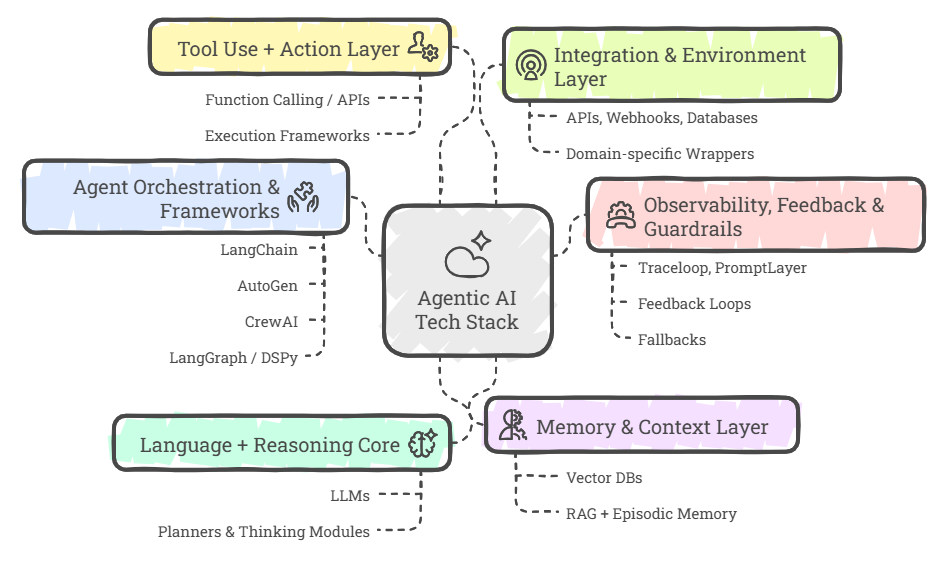

In this post, I’ll walk you through how I personally approach designing an Agentic AI Tech Stack.

This isn’t a tool list; you’ll find plenty of those online. It’s more about how we think through it: the options that are out there, when to choose what, and how to design a system that works and also scales.
