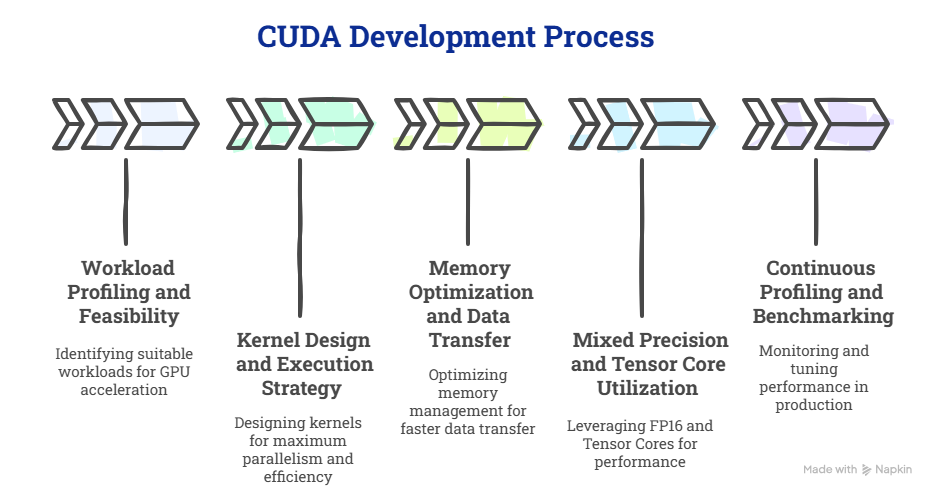

When we talk about performance in AI systems, it often comes down to one question: how efficiently can your models process data, learn, and adapt in real time?

Over the last few years, NVIDIA’s CUDA has become the backbone of that efficiency. It has quietly simplified how enterprises design, train, and deploy intelligent systems at scale.

At Azilen, our engineering teams use CUDA to push the limits of what’s possible with GPU-accelerated AI. Whether it’s optimizing large-scale inference pipelines or designing high-speed systems, CUDA helps us engineer AI that moves at enterprise speed.
