This is the fourth blog in our Data Engineering for Banks series.

If you haven’t read the prior ones, click here:

→ Why EU Banks Need Stronger Data Engineering

→ Data Engineering Starts with a Data Assessment

→ Designing a Robust Data Architecture for Banking

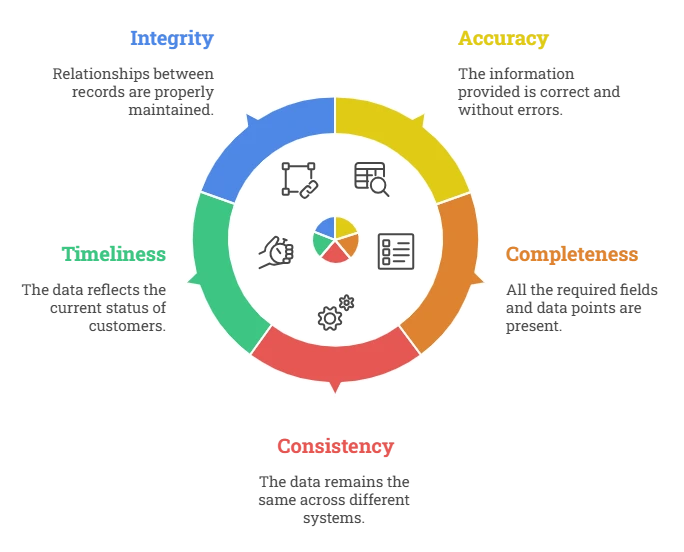

Now in Part 4, we’re getting into a topic every bank deals with: data quality.

Even the best architecture and assessments can only deliver results if the data flowing through your systems is accurate, complete, and consistent.
