1️⃣ ETL (Extract, Transform, Load): A process used to collect data from different banking systems, standardize it through transformation, and load it into a data warehouse or analytics platform.

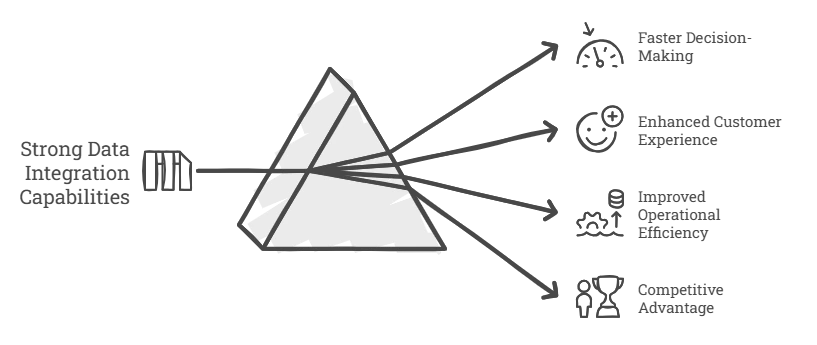
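To make the three ETL stages concrete, here is a minimal Python sketch. The two source systems (`CORE_BANKING`, `CARD_SYSTEM`), their field names, and the `transactions` table are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3
from datetime import datetime
from decimal import Decimal

# Hypothetical raw records from two source systems with different conventions.
CORE_BANKING = [
    {"acct": "1001", "amt": "250.75", "ccy": "usd", "date": "2024-03-01"},
    {"acct": "1002", "amt": "1,200.00", "ccy": "USD", "date": "2024-03-02"},
]
CARD_SYSTEM = [
    {"account_no": "1001", "amount_cents": 4599, "currency": "USD", "posted": "02/03/2024"},
]


def extract():
    """Extract: pull raw rows from each source, tagged with their origin."""
    for row in CORE_BANKING:
        yield "core", row
    for row in CARD_SYSTEM:
        yield "card", row


def transform(source, row):
    """Transform: map each source's format onto one standard schema."""
    if source == "core":
        # Amounts arrive as strings with optional thousands separators;
        # Decimal avoids floating-point rounding on money.
        cents = int(Decimal(row["amt"].replace(",", "")) * 100)
        return (row["acct"], cents, row["ccy"].upper(), row["date"], source)
    # Card system dates are DD/MM/YYYY; convert them to ISO 8601.
    iso = datetime.strptime(row["posted"], "%d/%m/%Y").date().isoformat()
    return (row["account_no"], row["amount_cents"], row["currency"].upper(), iso, source)


def load(conn, rows):
    """Load: write standardized rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS transactions ("
        "account_id TEXT, amount_cents INTEGER, currency TEXT, "
        "txn_date TEXT, source TEXT)"
    )
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, [transform(src, row) for src, row in extract()])
print(conn.execute(
    "SELECT account_id, SUM(amount_cents) FROM transactions "
    "GROUP BY account_id ORDER BY account_id"
).fetchall())
# → [('1001', 29674), ('1002', 120000)]
```

Once both sources share one schema, a single query can total activity per account regardless of which system a transaction came from.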

2️⃣ Data Integration: The practice of connecting and unifying data from various sources, such as core banking systems, CRMs, loan management tools, and mobile apps, into one consistent view for analysis and operations.
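A minimal sketch of data integration, assuming every source shares a common customer ID. The source dictionaries and field names below are hypothetical stand-ins for core banking, CRM, loan management, and mobile app extracts. Real integration also needs identity matching when IDs differ and explicit rules for which source wins on conflicting fields; here later sources simply overwrite earlier ones.

```python
# Hypothetical extracts from four systems, each keyed by customer ID.
core_banking = {"C1": {"name": "Ana Silva", "balance": 5200}}
crm = {
    "C1": {"email": "ana@example.com", "segment": "premium"},
    "C2": {"email": "raj@example.com", "segment": "retail"},
}
loan_system = {"C1": [{"loan_id": "L9", "outstanding": 15000}]}
mobile_app = {"C2": {"last_login": "2024-03-05"}}


def unified_view(customer_id):
    """Combine every source's data for one customer into a single record."""
    record = {"customer_id": customer_id}
    # Later updates overwrite earlier ones if two sources share a field name.
    record.update(core_banking.get(customer_id, {}))
    record.update(crm.get(customer_id, {}))
    record.update(mobile_app.get(customer_id, {}))
    record["loans"] = loan_system.get(customer_id, [])
    record["total_outstanding"] = sum(loan["outstanding"] for loan in record["loans"])
    return record


# Every customer known to any system appears exactly once in the view.
all_ids = sorted(set(core_banking) | set(crm) | set(loan_system) | set(mobile_app))
view = [unified_view(cid) for cid in all_ids]
for row in view:
    print(row)
```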

3️⃣ Real-Time ETL: An approach where data is processed and moved continuously, in near real time as each event occurs, allowing banks to act on time-sensitive information such as fraud detection, transaction alerts, and risk signals.
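The sketch below illustrates the real-time idea: each transaction is handled the moment it arrives rather than in a later batch. The `Txn` type, the thresholds, and the two rules (a large-amount check and a per-account velocity check over a sliding time window) are hypothetical examples, not a production fraud model; a real deployment would typically read events from a streaming platform instead of a Python list.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Txn:
    account_id: str
    amount: int  # whole currency units, for simplicity
    ts: float    # event time in seconds


# Hypothetical alerting rules.
LARGE_AMOUNT = 10_000
WINDOW_SECONDS = 60
MAX_TXNS_IN_WINDOW = 3


class StreamProcessor:
    def __init__(self):
        # Per-account timestamps of recent transactions.
        self.recent = defaultdict(deque)

    def process(self, txn):
        """Handle one event as it arrives and return any alerts it triggers."""
        alerts = []
        if txn.amount >= LARGE_AMOUNT:
            alerts.append(f"large transaction on {txn.account_id}: {txn.amount}")
        window = self.recent[txn.account_id]
        window.append(txn.ts)
        # Evict timestamps that have fallen out of the sliding window.
        while window and txn.ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        if len(window) > MAX_TXNS_IN_WINDOW:
            alerts.append(
                f"velocity alert on {txn.account_id}: "
                f"{len(window)} txns in {WINDOW_SECONDS}s"
            )
        return alerts


events = [
    Txn("A1", 50, 0), Txn("A1", 20, 10), Txn("A1", 75, 20),
    Txn("A1", 30, 30), Txn("A2", 25_000, 35),
]
processor = StreamProcessor()
for event in events:
    for alert in processor.process(event):
        print(alert)
```

Because state is kept per account and updated on every event, an alert fires on the transaction that breaks a rule instead of hours later.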

4️⃣ Data Pipeline: A series of automated steps that move, clean, and transform data from one system to another. In banking, data pipelines power dashboards, audit reports, and regulatory filings.
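A pipeline can be sketched as an ordered list of small, automated steps, each taking records in and passing cleaned records on. The step functions, field names, and review threshold below are hypothetical; the point is the structure, which makes it easy to add, reorder, or test individual steps.

```python
def drop_incomplete(records):
    """Step 1: discard records missing required fields."""
    return [r for r in records if r.get("account_id") and r.get("amount") is not None]


def normalize_currency(records):
    """Step 2: standardize currency codes, defaulting to USD (an assumption)."""
    return [{**r, "currency": r.get("currency", "USD").strip().upper()} for r in records]


def flag_for_review(records, threshold=10_000):
    """Step 3: mark records above a hypothetical reporting threshold."""
    return [{**r, "review": r["amount"] >= threshold} for r in records]


PIPELINE = [drop_incomplete, normalize_currency, flag_for_review]


def run_pipeline(records, steps=PIPELINE):
    """Feed each step's output into the next, in order."""
    for step in steps:
        records = step(records)
    return records


raw = [
    {"account_id": "1001", "amount": 500, "currency": " usd"},
    {"account_id": "", "amount": 100, "currency": "EUR"},  # dropped: no account
    {"account_id": "1002", "amount": 12_000},              # no currency given
]
for record in run_pipeline(raw):
    print(record)
```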

5️⃣ Data Warehouse: A central platform where structured data from various sources is stored, typically used for analytics, reporting, and business intelligence in banking.
